KlobiGB - Overview

Some of you might have read my rather grandiose and ever so slightly over-dramatic announcement of things to come. Among other writings I promised to share my insignificant little Game Boy emulator and today I shall finally make good on that promise. Or at least begin to do so. Or tell you about intending to begin doing so at some later date, maybe. We’ll see.

What I definitely will do in this article, however, is explain why I deemed it an interesting side project to pursue and believe emulator development in general is awesome. Not only is the process fun and rewarding in and of itself and the product generally useful but the required study of intricate and obscure details deepens ones understanding and appreciation of hardware, abstraction and the conveniences of more common environments. Everyone should have written at least one emulator in their life. Furthermore I will give a brief overview of the device in question and break down which corresponding pieces the emulator consists of, details of all of which I shall elaborate on in future articles. Finally, in a short preview - not to leave you hanging without showing any code at all - I shall discuss how I represent the CPUs state, in particular its registers, and why I chose to do it the way I did.

Emulation and why emulate?

To many of my dear readers1 the topic of emulation is most likely an intimately familiar one, at least from a users perspective. Nonetheless, just to ensure we are all one the same page here, allow me to clarify and explain ever so briefly what we mean when we use the term and why it is both useful for consumers and culturally significant.2

There are many ways we could define what an emulator is, but essentially it all boils down to one computer system acting as if it were a different one. We know this is possible, as we know a great deal of computer science, thanks to the great Alan Turing and work he and others did in the 1930s. In particular he developed a seemingly simple model of computation now known as a Turing Machine that is nonetheless powerful enough to compute everything every modern and real machine can.3 All our real computational systems and most languages used to program them could be simulated on a Turing machine and can - to some extent - simulate a Turing machine. As such, they are essentially equivalent, except for such minor and entirely insignificant inconveniences as speed and memory.

So Babbage’s Analytical Engine could in theory compute the beautiful transformation from markdown to html necessary for this post which my brand new Thinkpad T590 is constantly repeating live as I am typing. I just should have finished writing at the time of its inception, i.e. about 200 years ago, if I intend to publish anytime soon.

More relevant than this grand exercise in futility and the area you, dear reader, are most likely to come in contact with such technology is in the realm of consumer electronics. If you played a game on Nintendo’s Virtual Console, you ran an emulator. If you own any of the recent fad of mini-consoles, like the NES Classic, Mega Drive Mini or PlayStation Classic, you ran an emulator.4 If you typed a command in a typical linux or mac terminal window, you ran an emulator.

You may have noticed that this last example is not quite like the others. Not only are we not talking about a game console, it is quite likely you have not even seen the original hardware emulated. I know I personally have never seen a VT100, for example5, and nonetheless many of the programs I use daily issue escape sequences originally supported by it and expect them to be interpreted correctly.6

Which brings me closer to the point I am trying to make here. A multitude of reasons exist which go beyond the obvious circumvention of pesky copy protection and hardware vendor lock in and I would like to quickly address just one I consider among the most pressing: hardware dies.

I know, it’s something I am struggling to come to terms with, too.7 I’ll give you a moment to get over the initial shock before I drop the next bomb and tell you that some even stops being produced. And it just gets worse: Tragically, not all software is open source, code can get lost too. Simply adjusting, rewriting and recompiling is not usually an option for proprietary products. I think we can all agree that most software, especially video games, should be considered a work of art and is worth preserving just as much as paintings, movies, television shows and music are. Future generations should be enabled to enjoy an authentic experience of what it was like to consume these products of creative endeavor. Emulating the devices they ran on is the most sustainable way to do so.

So as you can see, it is kind of essential for preserving our cultural inheritance that someone engages in emulator development. Yet why should that someone be me or even you, dear reader? Why can’t it be someone else?

Whilst asking that question is not, in general, a good idea and all of us are all too prone to do so, let’s engage with it for just another minute.8

Naturally, random people on the internet, specifically on reddit, have much better answers than I myself could provide alone. Specifically, they mentioned:

- All programming is practice and improves ones ability to program

- An emulator is a rather big project and as such teaches you about code management and systems design

- The skill set acquired is especially helpful when dealing with embedded systems, as you have to deal with many low-level details rarely encountered in other types of projects

- Even if direct exposure to those details is rare, the additional knowledge of internal workings of hardware can help make you a more careful and better programmer

Those are great arguments, yet are they sufficient? I don’t know. As so frequently, I offered up a long, somewhat plausible and elaborate explanation and justification that at best tells only half the story. It seems far more like a rationalization I constructed after the fact than a candid reflection of my motivations at the start of this project. My thoughts at the time can simply be summarized as “This is fun!” and I belief that alone is enough.

Brian Kernighan9 once so eloquently suggested:

“Do what you think is interesting, do something that you think is fun and worthwhile, because otherwise you won’t do it well anyway.”

I am trying to follow his advice.

The Game Boy and why the Game Boy?

If you have never heard of the Game Boy, I assume you must either be very very young - good for you then - or have been living under a rock for a few decades.10 It must have been a particularly remote and secluded rock so, considering this thing has been continuously produced for 14 years until 2003, is still among the three most sold video game consoles of all time even now and has been to wars and survived:11

Many iconic characters and incomparably successful franchises still very much alive today, about three decades later, got their debut on this incredible little device.

All of this undoubtedly makes the Game Boy historically significant and a really great device to own and use, yet does it also imply it’s a great choice to develop an emulator for?

As with many interesting questions, there is no simple binary answer. Rather, it depends. More concretely, it depends on your goals. If you desire to produce an unrivaled product that delivers unique benefits to its users and will set you apart from the crowd, it might not be the best of choices. At the time of writing github lists 184 repositories under the topic gameboy-emulator.

If you desire a really difficult, nigh-insurmountable challenge with little help along the way and are already quite well versed and experienced, it might not be the most ideal choice either. The internet offers a ton of excellent documentation about almost all relevant details gathered and published by wonderful people over the course of many years, the emudev reddit is filled with myriad questions and answers about details and frequent errors and a number of excellent test roms are available.

If conversely - like me - you are just starting out in this direction, the situation could hardly be more ideal. The Game Boy is a reasonably simple device whilst not being entirely trivial. It is easy enough to get something on the screen and even quickly get the satisfaction of some playable games. Nonetheless there are some almost inevitable pitfalls ensuring you learn something new on the way. If one gets bored and ambitious, the number of hours one can sink into getting the details exactly right, supporting more games and peripherals and improving performance is limited by life, not problems to solve. 12

And - of course - I have an unhealthy obsession with Tetris and the early Pokemon games. Developing an emulator proves a most convenient excuse to play them and consider it work ;-). It is simply immensely satisfying to see this displayed in a program of my own:

How?

With this grand prelude out of the way we finally know what it is we want to do and why we want to do it13, so we can at long last get started on how to go about it.

As I most likely have already bored you out of your mind with all my meaningless introductory babble, lets not dwell on abstract matters much longer and get down into the weeds and details of our concrete example. There is just one relevant fact you have to understand before we get started. Allow me to put it in the words of one of the greater tv shows of recent memory at a time when it was still good:14

What our emulator has to mimic is only the outwardly observable behavior of the original system, not its exact internal workings. So we can save ourselves the trouble of getting down to the level of individual logic gates, transistors or even the underlying physics. That is essentially the difference between emulation and simulation.15

In order to determine which parts of the original hardware’s behavior we should deem relevant and observable, we ought to consider what it is our program eventually does: It takes as input a digital copy of any given real game and presents to the user an approximation of the visuals and sound this program would have shown and played were it executed on a real Game Boy. Button presses in some form are forwarded to the emulator and the program responds by changing what it presents in approximately the same way it would have had the physical buttons been pressed. As such we have to be somewhat accurate with everything that is shown directly to the user(humans don’t necessarily notice or care about every pixel and millisecond) and really accurate with everything that can be seen by a program, i.e. everything an arbitrary instruction in a program can use to change its behavior. If one instruction stores the value 42 and another reads the stored value, it does not matter how this happens, but it had better read 42, not 41 or 43.

It might seem patently obvious, but this trivial observation can guide us when deciding what is relevant and what is not.

Take, for instance, the PPU(Picture Processing Unit), which is responsible for drawing graphics onto the screen. It is a really strange little beast and I will go into much more detail about it in a dedicated article. For now, suffice it to say that despite using an LCD, it acts as if the image was drawn by a CRT, that is gradually in scanlines. Cycle accurately emulating this behavior can be quite expensive and it might be reasonable to ignore that detail and instead draw the whole screen at once at the same time the last scanline would have finished. This acceleration would, however, yield erroneous results. A program can set a specific register and thereby request an interrupt when the drawing reaches a specified line. It can then react to this and change parameters of the drawing mid-screen. Many if not most games utilize this to great effect. As such, we have to at least do our drawing line by line.

On the other hand, doing it pixel by pixel is not strictly necessary. Whilst some nasty(or ingenious) games even modify settings during the drawing of a single line, there is - to my knowledge - no way for a program itself to detect its effect and the damage done by getting it wrong is rare and mostly limited to some minor visual artifacts, which is a trade-off I am willing to make.

However, instead of getting ahead of and losing ourselves in the details of any one part, we should first of all get an overview of what parts there are and what their respective job is. I already mentioned the PPU above. Including it, there are a bunch of systems running in parallel:

- CPU: The “central processing unit”, or processor. This is what is responsible for executing instructions.

- PPU: The “picture processing unit”, basically the systems graphics card. This is responsible for drawing pictures on the screen, hence the name.

- APU: The “audio processing unit”. You might have guessed it from its name, but the beautiful music of our games is played with this piece of hardware.

- DMA Controller: DMA stands for “direct memory access”. This controller can be used by programs to quickly transfer some memory without involving the CPU in the copying process.

- Timer: A timer counting up a register that can be configured to interrupt the CPU.

These pieces communicate via memory mapped registers. Aside from those, the 64 KB address space also contains ROM, RAM and potentially other things from the currently inserted cartridge, so we have to somehow emulate this memory mapping as well and route reads and writes to the correct subsystems.

That much information should be sufficient to begin working. Better and more detailed writing as well as great videos can - of course - be found online. I wholeheartedly recommend watching The Ultimate Game Boy Talk, it has been an invaluable resource for me.

Sharp LR35902

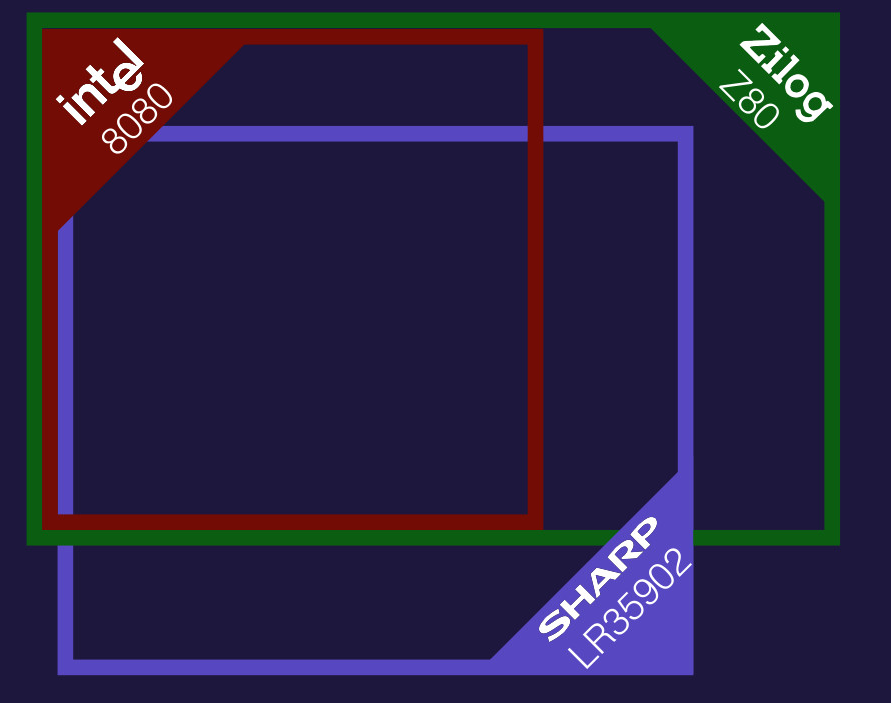

The first and central piece we shall take a closer look at and attempt implementing is the CPU. In the talk I mentioned above, Michael Steil utilized this beautiful visualization on his slides to describe it:

As you can see, it is somewhat of a mix between the more well known and frequently used Zilog Z80 and Intel 8080 processors with some slices cut away and some additional sugar sprinkled on top. It is an 8-bit processor, but uses a 16-bit address bus which grants it access to a 64KB big linear address space. As one would expect, it also has some quickly accessible storage in the form of registers. It sports the same set as the 8080. Here is an excerpt of the pandocs, describing them and their use:

16bit Hi Lo Name/Function

AF A - Accumulator & Flags

BC B C BC

DE D E DE

HL H L HL

SP - - Stack Pointer

PC - - Program Counter/Pointer

Let’s ignore the Stack Pointer, Program Counter and Flags for now and focus on the stuff in between, i.e. BC, DE and HL. Or should I rather say B, C, D, E, H, and L? Despite being mainly an 8-bit processor, the Sharp LR35902 supports some instructions working with 16 bit wide operands and for that purpose registers can be paired up and treated as one larger value. So B can be either a simple 8-bit value of itself or the most significant byte of the 16-bit register BC.

How exactly should such a construct be represented in code. This is, of course, language dependent, which is why at least a fleeting familiarity with my language of choice, C++, may be required to fully comprehend what is discussed below.16

As writes to a partial register have to modify the value of the combined one and vice-versa, we have to get slightly more sophisticated than separate variables of the appropriate bit width. Many, if not most, emulators chose to do something different for reasons I am going to outline in a moment, but with a cursory search, I found at least 7 that use something akin to the following:

union register16

{

std::uint16_t combined;

std::uint8_t parts[2];

};This seems, at first glance, like the simplest and most obvious solution. After all, multiple variables of different type sharing the same region of memory is exactly what unions are made for, isn’t it? Well, not exactly. This approach has two very significant drawbacks:

- It is undefined behavior.

- Even on compilers that specifically allow it, the exact behavior is machine dependent.

To understand the first point, the description above has to be amended by a few meaningful words: Multiple variables of different type sharing the same region of memory, only one of which is active at any given time, is what unions are made for. Whilst the members of a normal class type share (almost) the same lifetime, but live in different, non-overlapping (but adjacent) memory, the members of a union share the same memory, but use it at different, non-overlapping times. Writing to any member is ok and simply switches which is active, reading from an inactive member, however, is undefined behavior.17

We clearly cannot guarantee that whatever Game Boy code we execute happens to adhere to those rules. In fact, we can pretty much guarantee it does not, as building up the larger values by its parts is kind of how things were and frequently had to be handled on the device.

But what does that mean? What even is “undefined behavior”? I keep throwing this phrase around without any clarification. Well, dear reader, undefined behavior is exactly what it says on the tin. There are things with behavior not defined by the holy standard. Famous examples are dereferencing nullptr or accessing arrays out of bounds. The standard imposes no restrictions on what a compliant compiler is free to do if it encounters code committing such atrocities. It may set your computer on fire, launch nethack or - worst of all - do exactly what you expected it to. Until one day it doesn’t. It is clearly something any C++ programmer should avoid at all costs.18

“But wait”, I hear you say. “That is all fine and dandy for purists in a language lawyering class held in your cute little ivory tower, but out here, in the real world, we couldn’t care less about your theory. Compilers disagree. Look here, gcc specifically allows accessing inactive members, so why should I care?”

Fine. Point taken. If you know about the issue, know your compiler and know what you are doing, you can get away with it. Go ahead. This still leaves problem number 2 so: The exact results are machine dependent.

What do I mean by that? Quite simple: Endianness. Endianness is the ordering used to store multi-byte values in memory. Earlier, I described that B is the most significant byte in the 2-byte BC register. Whether this corresponds to parts[0] or parts[1] in the declaration above depends on the system we run on. On a big-endian system, which stores higher valued bytes at lower addresses, B is parts[0] and C is parts[1]. Little-endian systems, on the other hand, store lower value bytes at lower addresses and the parts would be reversed, B being parts[1] and C being parts[0].

If you know about the issue, know your compiler and your target architecture and know what you are doing, you can still get away with it, but the picture starts to look a little bleak by now. It might be worth considering alternatives.

What could be done instead? Specifics can vary and concrete solutions abound, but all of them basically fall into one of two categories: keep separate 8 bit values and combine them when needed or keep one 16 bit value and split it when needed. Specialize access to the whole or specialize access to the parts. My choice at the time was the latter, so in retrospect I have to admit it was rather arbitrary.19

In a solution not entirely unlike the proxy type used by std::vector’s bool specialization to reference individual bits and with all the same drawbacks making this so contentious, I decided to build a reference-like type to a single byte within a larger value:

class byte_proxy

{

public:

constexpr byte_proxy(std::uint16_t& target, unsigned shift) noexcept:

target_{target},

shift_{shift}

{}

constexpr operator std::uint8_t() const noexcept

{

return (target_>>shift_)&0xff;

}

constexpr byte_proxy& operator=(std::uint8_t val) noexcept

{

target_&=~((0xff)<<shift_);

target_|=(std::uint16_t{val}<<shift_);

return *this;

}

private:

std::uint16_t& target_;

unsigned shift_;

};This code is a bit simplified. The real one is a template to be more generic in its source and target type, contains some more casts to ensure we get the right types and values without any narrowing, integral promotions or sign extension getting in our way and overloads some more special members, like assignment from another byte_proxy and the compound assignments(i.e. +=, -= etc.). I deemed such details unimportant and distracting, so I decided on omitting them in service of accessibility.

The basic idea is rather trivial:

- It is constructed with a reference to a larger value and a starting position inside this value. A shift of 0 addresses the first, lowest valued byte, a shift of 8 addresses the next higher valued one and so on. Values in between would also be possible, but are not useful in our case.

- It can be converted to a simple 8-bit value by shifting the relevant bits to the front and masking off the others.

- If it is assigned to, it first sets the relevant bits to 0, thereby erasing whatever was stored before, shifts the replacement into position and uses bitwise or to insert it.

Shifting bits in an unsigned value is clearly defined to be equivalent to multiplying with 2 raised to the power of the shift amount (modulo 2number_of_result_bits). It is as such machine independent and guaranteed to give the same and desired result on any platform it is compiled for. All our problems are solved.

Well… the most astute and well-read of my readers are likely to interrupt at this point and call me out for some minor hypocrisy. When presenting the union solution I held it to the highest standard and demanded compliance, but my own solution is not guaranteed to work either. The fixed-width integer types are optional, an implementation may chose to simply not provide them at all. I still maintain that it is better. If it compiles we can be absolutely sure it works as advertised, if it cannot work, it will fail to compile. I’d take that over undefined or unspecified behavior any day.

Alright, with that out of the way, how do I actually use this? Do I keep additional members in my state class for all the partial registers? I could do that, but it has some downsides:

- It makes programmatically selecting a register at runtime a little annoying (basically using a big switch and there are really enough of those in an emulator xD)

- It kind of wastes memory (a really, really insignificant amount in the grand scheme of things and if this were the only reason it would clearly be premature optimization without any benefit. But why pessimize unnecessarily?)

- It makes copying or storing and restoring the state more difficult and error prone. This one is really problematic. As the state would contain references to part of itself, simply copying it normally does not work or might allow the copy to modify the original. Even worse, those references could be left dangling and - once again - throw us right back into undefined behavior land.

As such, I store the 16-bit registers as an array and create the partial proxies on the fly whenever required. But wait, isn’t that ugly? Will I have to write registers_[0] to get at A and lose all the beautiful mnemonics? Not really, that is what enums are for:

enum register16

{

BC, DE, HL, AF, SP, PC

};Each enumerator can now be used to index into our array. state[registers16::AF] for instance would provide us with the 4th entry. But wait, isn’t that in itself ugly and dangerous? Doesn’t it pollute the global namespace and allow far more operations than we actually want? Of course, that is what strongly typed enums are for:

enum class register16

{

BC, DE, HL, AF, SP, PC

};But wait, doesn’t that prevent our original use-case? Without the implicit conversion to int we can’t use the enumerators to index into an array now, can we? That is correct. Enter: the worlds simplest container that is not a plain array:

template <typename T, typename enum_type, std::size_t number_of_values>

class enum_map

{

public:

using underlying=std::underlying_type_t<enum_type>;

constexpr auto& operator[](enum_type v) noexcept

{

return data[static_cast<underlying>(v)];

}

constexpr const auto& operator[](enum_type v) const noexcept

{

return data[static_cast<underlying>(v)];

}

constexpr auto size() const { return number_of_values; }

private:

std::array<T,number_of_values> data{};

};Again, the real code contains some more members to be a proper container, but it’s unimportant to get the idea. All it does is forward operations to an underlying std::array, explicitly casting away the enum where required. We are back to what we had with a plain array and plain unscoped enums, with some additional safety, as it can only be accessed by valid enum values. One could still work around this of course, yet trying to prevent it is no longer fighting against Murphy, but Machiavelli.20

Great. Combining those parts nets us a working and at least somewhat elegant solution, but at what cost? It seems like quite a lot of machinery and abstraction just to get around issues likely never to appear on any system this emulator is ever going to be compiled for. Is it worth it? Is it expensive?

I planned to present a small benchmark here to prove my point, but it turns out I can do one better than that. Have look at this simple test:21

//[...]

class simple_cpustate_enum_proxy

{

public:

constexpr std::uint16_t& operator[](register16 id) noexcept

{

return registers_[id];

}

constexpr byte_proxy operator[](register8 id) noexcept

{

const auto idx=static_cast<::std::underlying_type_t<register8>>(id);

return {registers_[register16{idx/2}],(idx%2)*8u};

}

private:

enum_map_with_num<std::uint16_t, register16> registers_;

};

union register_union

{

std::uint16_t combined; std::uint8_t parts[2];

};

struct simple_cpustate_union

{

register_union BC, DE, HL, AF, SP, PC;

};

simple_cpustate_enum_proxy magic_enum();

simple_cpustate_union magic_union();

int test_enum()

{

auto state=magic_enum();

return state[register8::A]+state[register16::SP];

}

int test_union()

{

auto state=magic_union();

return state.AF.parts[1]+state.SP.combined;

}

//[...]

In it I compare and contrast two simplified versions of the cpu state. One - simple_cpustate_enum_proxy - using the constructs described above and another one - simple_cpustate_union - using a simple union. I introduce, without defining, two functions to conjure up instances of those types. This is done to prevent the compiler from optimizing everything away. As it cannot “see through” those calls it has to actually generate code to extract the values we are interested in. If you have a look at the compiler explorer link, you will however discover it manages to see through everything else I have done. The assembly generated for both cases is completely identical. Compilers really are amazing!

Yes, there are no zero cost abstractions, but the cost here is purely on the developer side and its a cost payed for a clear benefit of well defined and clear code. The issues one had to think about when constructing it were issues that ought to be thought about anyway and the resulting constructs are more generally useful. I consider this a net win.

Outlook

Let’s recap: I’ve argued that emulation is important, developing an emulator is fun and educational and the Game Boy is a great device to get started. I briefly explained why and how emulation works and elaborated on a small, but important, implementation detail. I believe this is enough for one article. In my next post on the topic, I shall dive deeper into the exact workings of the CPU, how to implement the various supported instructions and how to efficiently integrate all of that into a larger, exchangeable and as such easily testable system. It will be more technical and I promise to cut down on the long introductions.

Thank you very much for reading. I am eagerly awaiting your constructive feedback, destructive feedback, insults and threats of violence.

-

whom am I kidding here? It’s not like I have “many readers” yet xD ↩

-

Feel free to skip this section or any of the following if all the generic talk and ruminations about motivations are not to your liking. Until I get started with “How?” none of the sections really depend on previous ones. ↩

-

In some respects it is even more powerful, as it is defined to have an infinitely long tape(i.e. infinite memory). Modern machines using finite memory can only be in a limited amount of states and could as such even be modeled as a “simple” Finite State Machine ↩

-

In Sonys case it is simply a well known open source emulator, which is kind of interesting, given their controversial history with third-party emulator development. ↩

-

it was before my time, but I would love to own one. ↩

-

It is also fascinating to note that Linux was originally created because Linus Torvalds was unhappy with what Minix provided and decided to write a terminal emulator to connect to his universities computer. I’d recommend a reading of his autobiography, it is quite interesting. ↩

-

I have some unnatural and unhealthy attachment to all my devices, even to the point of naming them. Whenever any of them breaks down beyond repair I run through the usual stages of grief but somehow - just as in real life - I never quite manage to get to the acceptance part. ↩

-

Assuming I judge your reading speed correctly. If not, I apologize. ↩

-

of awk and C and unix and… fame. Really, if you don’t know who he is read up on him and all the phenomenal work he produced. Computer science and computing would not be the same without him. ↩

-

I really don’t mean to be offensive here. I have no problem with living under a rock. Live wherever you feel comfortable. I can quite understand a desire to get away from humanities mad inhuman noise. ↩

-

Why not start with something even more simple then. Why not choose the ubiquitously mentioned Chip8 for instance? Well, the truth is, I did. A few years ago on a particularly uneventful weekend that was my first emulator. If you feel overwhelmed by complexity I suggest taking a look at it. With its 35 opcodes and very simple display it can really help getting started and developing a general feel for how to structure an emulator. ↩

-

Technically, that phrasing is wrong of course, as “we” don’t “want to do” anything really and I describe what it is I already did. Nonetheless, to simply my writing and make it more directly relevant to you, I shall, from this point on, simply assume you wish to do something similar to what I did. ↩

-

That is very similar to a rule in the C++ Standard. The as-if rule is essentially what allows the compiler to optimize the horrendous code we present it with. It is allowed to transform what we have written in absolutely any way, provided we cannot observe it and the perceived result is the same, except for performance. In the case of emulators, of course, performance is correctness and can be observed. ↩

-

A short time ago, Matt Godbolt(of Compiler Explorer fame) and Jason Turner(best known for C++ Weekly and CppCast), both of which are far more knowledgeable and much better versed in all this than I could hope to be, did an interesting livestream where they discussed this(among a lot of other things). They also showcased this awesome project. ↩

-

Those who know me and those who skimmed over the tags assigned to this post might have guessed already ;-) ↩

-

This source of hard to predict errors is the reason unions are usually paired with a tag/discriminator to remember which part is currently active. Even that is still quite error prone as it relies on programmer discipline to check the tag and act accordingly, which is why safer alternatives, like std::variant, exist. ↩

-

There are great tools out there to help you catch such code before the damage is done. ↩

-

It should not make too much of a difference, but blanket statements like that without any evidence are rarely a good idea. It would have been better to do some measurements or at least count which kind of access is more frequent. ↩

-

This is a common expression used to describe that C++ does not try to fight intentional, malicious attempts to subvert its rules, only accidental ones. ↩

-

The part displayed here is only an excerpt, you can click on the description to download the complete file or on the compiler explorer link to view it on there. ↩

Comments powered byTalkyard.