A plea for order

I know I promised to talk more about emulation, about chess and operating systems, but I have to get something off my chest first. This is not going to be one of my would-be grand and elongated epics trying to cover far too much in far too many words. Instead, I shall focus on one very small, very self-contained thing: the order of #include directives in C++ source code.[1]

If you know me or happen to have read my about page or the beautifully self-centered explanation of why this blog exists, you might know that I tend to think what is great for me is also beneficial for others. As such, I ~~force~~ strongly encourage my friends to regularly engage in recreational programming. A few days ago, one particularly ambitious ~~victim~~ student of mine asked for assistance in tracking down a bug. Upon perusing the file sent to me, I was treated to this gem of questionable beauty:

#include <sys/types.h>

#include <sys/socket.h>

#include <netinet/in.h>

#include <arpa/inet.h>

#include <unistd.h>

#include <iostream>

#include <fstream>

#include <array>

#include <vector>

#include <thread>

#include <atomic>

#include <mutex>

#include <queue>

#include <chrono>

#include <ctime>

#include <unistd.h>

#include "mySocket.hpp"

#include "user.hpp"

#include "messages_tools.hpp"

//[...]

The real code was all right and the source of the bug was located easily enough, but this minor abomination painfully reminded me of an irksome issue too rarely addressed.

It is not an isolated incident either, but rather somewhat of a pet peeve of mine I am confronted with quite frequently. Here is an excerpt of some code I had to work on as part of my last project as a TA at university:

#include <cmath>

#include <assert.h>

#include <chrono>

#include <fstream>

#include <chrono>

#include <math.h>

#include <algorithm>

#include <scai/dmemo/Distribution.hpp>

#include <scai/dmemo/HaloExchangePlan.hpp>

#include <scai/dmemo/Distribution.hpp>

#include <scai/dmemo/BlockDistribution.hpp>

#include <scai/dmemo/GenBlockDistribution.hpp>

#include "PrioQueue.h"

#include "MultiLevel.h"

#include "Settings.h"

//[...]

Having read this far,[2] you might be wondering what it is I am so agitated about. Where and what exactly is my problem? Is there a bug in this simple list of includes? Can there be?

Well, the fact that you are even considering the option kind of makes my point for me. Nonetheless, the answer is no. There is no clear “bug” here. Both examples compiled fine and showed no problems directly related to the fragments shown. What I am so concerned about is largely stylistic, cosmetic even. It is not about correctness. Not immediately so.

In case I wasn’t exactly clear about it, allow me to spell it out explicitly. I am thoroughly annoyed by the lack of any discernible structure. The order the directives appear in is a jumbled mess so incoherent as to leave any viewer confused as to what is actually used by the code and at a complete loss when trying to determine whether a header required for potential new additions is already included. The authors themselves fell prey to that already: my friend included unistd.h twice, whilst the university code contains a duplicate directive for chrono and conflicting variations of math.h and cmath.

I think we can all agree that the situation described above could be improved. The two pressing questions remaining are: should it be and if so, how exactly can we go about doing so? Is it worth whatever minuscule amount of extra effort some order would require? Judging by the title of this article and the ever so slightly strong language used to express my grievance you might have guessed my personal opinion on the matter, but even I admit there are at least two reasonable objections:

- Why are you even talking about #include in 2020? This is the decade of modules and modules instantaneously solve all problems simple headers ever caused.

- Stop being such a petty killjoy! What does it matter if some files are included twice? Just let every developer add the headers they need once a compiler complains, be done with it and spend your precious time on the real problems, writing real code, not insignificant include directives…

At least they seem reasonable on a surface level. Please allow me to dismantle and destroy them.[3]

Modules can’t save us

Let’s address modules first. Modules are one of the four really big and greatly anticipated features we finally got with the new C++20 standard.[4] They promise to at long last get us a little bit further out of the macro-infested mess C originally got us into. Don’t get me wrong, I love the C programming language. Its simplicity, elegance and practicality are unmatched, and were even more so at the time of its inception. In Stroustrup’s grand taxonomy of programming languages, those everyone complains about and those no one uses, C clearly falls within the former category.[5] Nonetheless, existing and striving for backwards compatibility for so long - the famous K&R book was published in 1978, 42 years ago - is bound to accrue some technical debt.

I believe its somewhat simplistic model of separate compilation and the dumb, mindless textual substitution used to stitch together translation units from various source and header files can be considered such debt, and it’s about time we paid up and moved on. It was perfectly sufficient at the time, but in modern environments and at modern scale, we can and should be doing better. The high-level design goals of modules include better isolation, better encapsulation and better interfaces, and I’d like to think the design converged on after more than 15 years managed to achieve them. I won’t be going into any detail about how they work and can be used, for three simple reasons: it is not exactly on topic; as usual, others have already done an amazing job explaining this, both in writing and in great talks; and my own knowledge and experience are cursory at best.

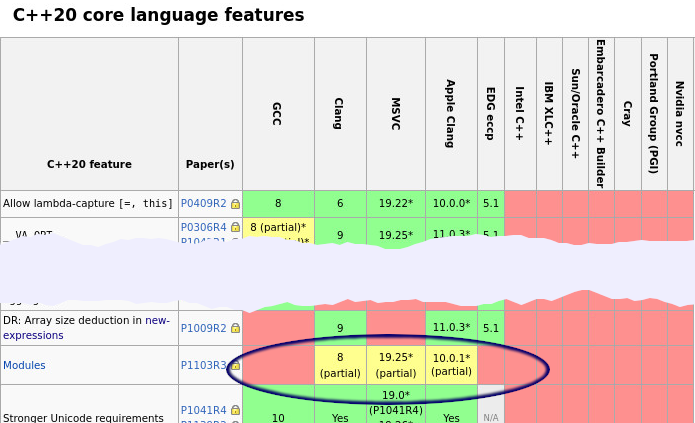

I further believe I am not entirely alone in not being an expert on the topic. The design was somewhat contentious and not entirely insignificant changes were made in the later phases of its standardization. As such, experimentation and general adoption are hindered by one more minor issue. Have a look at this great overview of compiler and library support for all the myriad C++ features of newer standard revisions. At the time of writing it contains the following relevant column:

Apparently none of the big three compilers - gcc, clang and msvc - ship with complete modules support yet. The speed of adoption has significantly grown since C++11, but even with near perfect implementations, newer standards are not as prevalent as some fanatics like me would like them to be. Judging by some interesting survey results it does not seem to be an exaggeration to claim that approximately half of all C++ developers do not even use C++17 yet. Baselessly extrapolating from that data, it might well be another 5 - 10 years until a majority of programmers and companies use some C++20 in all of their codebases. It will most certainly take even longer to completely transition away from headers and transform or replace all legacy code. And even if this happens - which is somewhat doubtful - we are still left with C compatibility. Their importance may be reduced significantly, but headers are here to stay.

So no, whilst modules are generally great, solve many a problem and were desperately needed, they do not save us here. All of my concerns will remain valid for some time to come and some - like readability and clarity of intention - would matter even in a hypothetical and really really far away modules-only world.

Style matters

In the meantime, we have to deal with includes and might as well do it with some structure. Or should we not care, do just what we absolutely must and be done with it? This second objection I promised to address can actually be construed as two different views of differing extremes: Should we not care about style in general, or is this one specific instance just too insignificant? The first is trivially easy to debunk, the second one might prove a little more involved. As such, let’s jump right in so I won’t lose your precious attention before my actual call to action.

There is this famous saying in programming circles, that one should always write code as if the person who has to maintain it in the future is a violent psychopath who knows where you live. Considering I tend to maintain my own code, at least one part is true and my sense of orientation is notoriously lacking.

Yet, why should we fear this person? Which properties in our code could trigger their rage and for what possible reasons? Well, another adage that complements the previous one perfectly states the important fact that code is far more frequently read than written. If you don’t finish your project in one sitting - and it’s a rare one that can be finished in so short a time - or if you happen to collaborate with someone else, whatever you wrote has to be parsed and understood, its intentions, methodology and implications gleaned as quickly and with as little friction as possible. You don’t just write for a compiler, you write for humans.

This can’t be stressed enough. We tend to eschew goto, for example, not because it itself is fundamentally flawed, but because we are. It inhibits our ability to reason about control flow, to be certain about which pieces of code are executed in which sequence and which might be skipped entirely.[6] The compiler has no such issue. It doesn’t care. We do.

In the same vein, we preach to carefully choose self-explanatory identifiers not because it is necessary, but because it aids readability and serves as a design aid. When we can’t clearly name a thing, we might have to rethink what and if it should be. Again: The compiler doesn’t care, it might even throw away the result of our hard work. Yet, I challenge you to try and guess what the following function does and should do:

int z(int _){return _<2?1:(z(_-1)+z(_-2));}

I trust all of my dear readers can figure that one out eventually. Nonetheless, you have to admit it was not exactly self-evident and you had to actively engage in dissecting and thinking about it. Contrast that experience with the following, absolutely identical fragment:

int fibonacci(int n)

{

if(n<2) return 1;

return fibonacci(n-1)+fibonacci(n-2);

}

At but one glance, without even reading the second line, you are aware of what it should do. As the intent is clear, subsequently your attention will not be spent on guessing and mechanics, but checking how the task is accomplished. With a tiny bit of domain knowledge, you might even spot the error. Or know a multitude of better solutions.
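The article does not spell out which error or which better solutions it has in mind. As a minimal sketch, assuming the error is the base case (under the common convention F(0) = 0 and F(1) = 1, the version above returns 1 for an input of 0) and that a linear-time loop counts as one of the better solutions, an alternative might look like this:

```cpp
#include <cstdint>

// Iterative Fibonacci, assuming the convention F(0) = 0, F(1) = 1.
// Runs in linear time instead of the exponential time of the naive recursion.
// The result is exact up to n = 93; beyond that the unsigned 64 bit value wraps.
std::uint64_t fibonacci(unsigned n)
{
    std::uint64_t previous = 0; // F(0)
    std::uint64_t current = 1;  // F(1)
    for (unsigned i = 0; i < n; ++i) {
        // Invariant before each step: previous == F(i), current == F(i + 1).
        const std::uint64_t next = previous + current;
        previous = current;
        current = next;
    }
    return previous;
}
```

The descriptive name does the same job as before: a reader can go straight to checking the loop invariant instead of guessing what the function is for.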

And that, my friends, is the crux of the matter. Lack of stylistic concern is not itself a bug, but the cause of many. Style is never about just being - for some nebulous definition - pleasant to look at, but about being clear, reducing cognitive load and allowing the reader to focus on what is essential, making every character count. Code is written for humans and humans have limitations. Taking this into account leads to clear, readable expression of ideas. Neglecting it leads to misunderstandings and confusion. Good style fosters elegant, correct and efficient code. Bad style facilitates errors. Pasta is tasty, but I much prefer penne to spaghetti.

#include matters[7]

The generalized argument is quite obvious and I believe few would dare to disagree. I might, however, be in the minority in caring so deeply about this one specific issue. As a community, we routinely teach the importance of naming, worry about whether private or public parts should appear first in a class declaration and debate the merits of east const and the correct one, yet when it comes to include directives, order is often sorely neglected.

Whilst some articles about the topic seem to have been written over the years, those are few and far between. The newest I could find is from 2017 and none of them explain their motivation and reasoning in as much excessive detail as I’d deem adequate.[8]

The wonderful and official C++ Core Guidelines have some rules for how to deal with header files and set some goals to strive for that I completely agree with, but readability and ease of getting an overview of what is or isn’t used are notably absent. They even contain - at the time of writing - the following example as a good one:

#include <vector>

#include <algorithm>

#include <string>

// ... my code here ...

Granted, the point illustrated here is a different one, but what thought process has gone into including vector before algorithm before string? I suspect none at all. With those three and in a simple self-contained example that is perfectly fine, but just as C++ developers as a group seem to have converged on using pre-increment whenever there is no reason to use the somewhat more powerful post-increment and consider it premature pessimization to do otherwise, I believe not structuring includes from the get-go should be considered a premature pessimization for readability. It really doesn’t take much effort and prevents problems down the line.

I absolutely mean what I wrote there, it can cause real problems. As stated in the previous section when advocating for style in general, everything that might confuse is already detrimental, but it is more serious than that. The problem here lies not simply in what is, but what could be and the fear and uncertainty this instills in collaborators.[9] It is not entirely unheard of that some libraries require a certain order. Reasons can vary.

Some - like the linked one - simply have to deal with a mess infested with configuration macros.

Sometimes, and even more insidiously, the meaning of code can subtly and silently change based on what is declared first. Have a look at this simplified example:

#include <iostream>

void gun(double)

{

std::cout<<"double\n";

}

void gun(int)

{

std::cout<<"int\n";

}

void fun()

{

gun(42);

}

int main(int argc, char* argv[])

{

fun();

return 0;

}

Let’s ignore for a moment that I exemplified precisely the horrid naming I criticized before and analyze what exactly is happening here. In main we simply call a function appropriately named fun. This in turn calls a function even more appropriately named gun, passes the value 42 and this is where things get interesting. Name lookup is performed to get a set of candidate functions, which yields - in our case - the two variations of gun, one expecting a double, the other one an int. After this, overload resolution kicks in, selects the int version as a better candidate and our program dutifully prints “int”. Pretty simple, right?[10] Too simple even, so let’s switch things up a little:

#include <iostream>

void gun(double)

{

std::cout<<"double\n";

}

void fun()

{

gun(42);

}

void gun(int)

{

std::cout<<"int\n";

}

int main(int argc, char* argv[])

{

fun();

return 0;

}

All I did was swap the positions of fun and the second version of gun. Which is exactly the same as would happen if both were defined inline inside different header files and we swapped the include order. This might seem harmless, but the meaning of our program just changed. When executed, it will - somewhat surprisingly - output “double”. When collecting candidate functions for firing the gun, the second, better fitting version is not yet known and due to dreaded implicit conversions no problem is detected with using the first.
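Both behaviours can be observed side by side in a single translation unit. The following is a minimal sketch, not the original program: the functions return strings instead of printing, and the names fun_early and fun_late are made up for illustration.

```cpp
#include <string>

std::string gun(double) { return "double"; }

// Only gun(double) has been declared at this point, so the call binds to it
// and the int argument is implicitly converted.
std::string fun_early() { return gun(42); }

std::string gun(int) { return "int"; }

// Both overloads are visible here, so overload resolution picks the exact match.
std::string fun_late() { return gun(42); }
```

Calling fun_early() yields "double" and fun_late() yields "int", although the two bodies are character for character the same. The only difference is which declarations precede them, which is exactly what include order controls.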

I admit, this concrete example is unlikely to occur in practice. Nonetheless, it showcases a type of problem that can realistically happen and causes me to feel queasy whenever I see an ordering I fail to understand. Just like you, dear reader, when I suggested in strong words that there might be a problem in the introductory examples, I am left to guess the author’s intentions. Was there willful negligence or a brilliant scheme beyond my comprehension?

The most frequent “errors” I observed, however, are simple hidden dependencies which just so happen to be satisfied transitively. Once more, allow me to demonstrate:

A.hpp:

#ifndef A_H

#define A_H

#include <string>

const char* meaningless();

#endif

A.cpp:

#include "A.hpp"

const std::string str="Hi";

const char* meaningless()

{

return str.c_str();

}

B.hpp:

#ifndef B_H

#define B_H

std::string example();

#endif

B.cpp:

#include "A.hpp"

#include "B.hpp"

std::string example()

{

return meaningless()+std::string{" dear reader!"};

}

Again, not the most realistic of examples, but brief and illustrative of a larger issue. What happens if, say, the author of A realizes the ridiculousness of the meaningless implementation and changes it to no longer unnecessarily construct a std::string:

#include "A.hpp"

const char* meaningless()

{

return "Hi";

}

Diligently, the header is also changed to no longer include <string>.

B breaks!

B.cpp will now fail to compile not because anything it did changed, not because anything it directly used changed, but because one of its dependencies changed its implementation. Not its interface, just the implementation. Needless to say, this is very bad. Among all the dangers listed above, it is luckily also the one most easily avoided. Whilst we cannot guarantee no third party code ever falls into this trap, some simple rules can reduce the risk of suffering from it and prevent us from imposing such burden on our own users. To understand how, let’s first reiterate what exactly went wrong:

- The author of A exposed an implementation detail - its use of std::string - in the corresponding header file.

- The author of B fell into Hyrum’s Law and unwittingly relied on this private detail.

Both parties could have done better.

- The author of A could have prevented the issue appearing at all by being explicit and distinguishing between which dependencies are required to use the provided functionality and which are simply implementation details and might change. As such, the include directive should have been in the A.cpp file, not the header.

- The author of B could have detected the issue earlier by including its own header first. This would have triggered a compiler error, as B.hpp is not self-sufficient and does not include everything it uses. Subsequently changing B.hpp to ensure B.cpp compiles breaks the implicit dependency.
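Applying both remedies to the example might look roughly like the sketch below. To keep it compilable as one piece, the four files are concatenated into a single listing with comments marking the boundaries. In the real project each .cpp file would begin with the #include directives shown in the comments, and the exact layout is an assumption for illustration.

```cpp
// ---- A.hpp: the interface needs no std::string, so nothing is included ----
#ifndef A_H
#define A_H

const char* meaningless();

#endif

// ---- A.cpp: would begin with #include "A.hpp" ----
const char* meaningless()
{
    return "Hi";
}

// ---- B.hpp: self-sufficient, it includes what its own interface uses ----
#ifndef B_H
#define B_H

#include <string>

std::string example();

#endif

// ---- B.cpp: would begin with #include "B.hpp", followed by #include "A.hpp" ----
std::string example()
{
    return meaningless() + std::string{" dear reader!"};
}
```

With B.hpp included first in B.cpp, a missing <string> in B.hpp would have been a compiler error on the first build, long before A changed anything.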

In conclusion: I think I have shown that even if there were no technical downsides at all, a lack of order does still cause some cognitive overhead. It either gives your reader something to think about that might be of no consequence and simply distracting or it encourages them to completely skip over a section of your file, causing you to convey less useful information than you could have. You squandered an opportunity to be explicit about what your dependencies are, obscured your intent and failed to ensure that whoever works with your files next can feel confident in any modifications and additions.

So what?

With all this I hopefully managed to convince you that some rudimentary rules can be beneficial. Which is, once again, where we arrive at an impasse. Just as with project organization in general - though there have recently been some commendable and valiant efforts - there is no one, official, all-encompassing standard way to handle this ordering. Regardless, we don’t have to be entirely subjective and can be informed by the concerns addressed above. As such, I would like to outline which guidelines I have adopted in my own personal projects as well as the underlying reasoning, in the hope that it proves of some use and inspiration for my dear readers.

In order to identify what is good or bad and construct a generally useful and coherent ruleset we must first agree on some goals to strive for. On a high level, I trust the following two will not be controversial:

- Minimize errors

- Maximize clarity

From the possible problems described combined with these criteria, we can deduce the following instructions:

0. Be self-sufficient, include everything you rely on.

1. Be minimal, include only what is required.

2. In a cpp file, first include the corresponding header.

3. After this, organize headers in interrelated groups - based on projects or libraries - and list those in reverse order of potential dependencies. E.g. first list everything belonging to the same project, followed by other libraries used, followed by almost-standard libraries like boost, followed by the C++ standard library, followed by the C standard library.

4. Order the group members themselves alphabetically.

I’d be remiss to omit, that whilst I do like to think I came up with those rules on my own, they turn out to be not entirely original. Far more experienced and knowledgeable people seem to have arrived at pretty much the same set. I don’t think that is a downside but rather consider it a confirmation of my reasoning. The result appears to follow logically from the stated criteria.

I already kind of touched on it in the previous section, but the one possible error we can deal with is hidden dependencies. Rules 0 and 1 should help us eliminate them and rules 2 and 3 serve as a sort of sanity check to ensure we detect violations quickly.

To maximize clarity, we want to enable a human to quickly discover what is needed and glean as much useful information as possible with a cursory glance. Rule 0 forces us to be explicit about our dependencies instead of relying on implicit support and expectations. Rule 1 guarantees that whatever is mentioned is relevant. The grouping allows a quick scan to reveal which larger sets of libraries are depended on and the alphabetical order helps to quickly determine the presence or absence of any particular include.
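The grouping and alphabetical ordering are mechanical enough to be automated. The following is a rough sketch rather than a finished tool. The names order_includes, classify and header_name are made up, and the classification heuristics are assumptions: quoted includes count as project headers, angle-bracket names without a dot as the C++ standard library, angle-bracket names ending in .h as the C standard library, and everything else as third-party. Placing the corresponding header first is not handled.

```cpp
#include <algorithm>
#include <string>
#include <tuple>
#include <vector>

// Group ranks in the order the groups should appear (heuristic, see lead-in).
enum class Group { Project = 0, Library = 1, CppStandard = 2, CStandard = 3 };

// Extracts the name between the delimiters, e.g. "vector" from "#include <vector>".
std::string header_name(const std::string& line)
{
    const auto open = line.find_first_of("<\"");
    const auto close = line.find_last_of(">\"");
    if (open == std::string::npos || close == std::string::npos || close <= open)
        return line;
    return line.substr(open + 1, close - open - 1);
}

Group classify(const std::string& line)
{
    const std::string name = header_name(line);
    if (line.find('"') != std::string::npos) return Group::Project;     // "user.hpp"
    if (name.find('.') == std::string::npos) return Group::CppStandard; // <vector>
    if (name.size() > 2 && name.compare(name.size() - 2, 2, ".h") == 0)
        return Group::CStandard;                                        // <unistd.h>
    return Group::Library;                                              // <lib/x.hpp>
}

// Sorts by group, then by header name (byte-wise), and drops exact duplicates.
std::vector<std::string> order_includes(std::vector<std::string> lines)
{
    const auto key = [](const std::string& l) {
        return std::make_tuple(classify(l), header_name(l), l);
    };
    std::sort(lines.begin(), lines.end(),
              [&key](const std::string& a, const std::string& b) {
                  return key(a) < key(b);
              });
    lines.erase(std::unique(lines.begin(), lines.end()), lines.end());
    return lines;
}
```

Fed a shortened version of the first example from the introduction, this would list "mySocket.hpp" and "user.hpp" first, then array and vector, then a single unistd.h. A serious tool would need a proper parser and knowledge of the actual header sets instead of guessing from file extensions.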

A plea

With this, I shall finally come to an end and fear I might owe you an apology. I promised this would be short and as the more perceptive of you might have noticed I failed to keep that promise. Let’s just claim I lacked the time, thank you for sticking with me.

I do hope it has been useful. As I repeatedly elaborated on those points in excruciating detail in private conversation and was at one point so annoyed by the frequent inconsistencies as to force it on my university students via an exercise,[11] I believed my thoughts might be more generally interesting. At least I now have an article to point to whenever it does come up. I am very much interested in your thoughts. Am I misguided and obsessive? Am I just plain wrong? Feel free to enlighten me in the comments below!

In closing, allow me to implore you one last time: We might not agree on which exact order to follow, but I hope you now concur some is needed. So please, I beg of you: Adopt “my” rules. Adopt some other rules. I really don’t care about which, just adopt some.[12]

If you say in the first chapter that there is a rifle hanging on the wall, in the second or third chapter it absolutely must go off. If you include a file, it had better have a purpose for being where it is.

[1] Conveniently, this is also a much easier article to write. And a bit of an unbridled rant, so please excuse some foul language ;-)

[2] Thank you for that.

[3] Of course, what I am doing here is not exactly fair. All I do is construct a strawman to beat it up and tear it back down again. That is for the very simple reason that I am not aware of any better arguments against my position. If you do know any and are convinced I am delusional, I would be most grateful to be enlightened by your wisdom. Feel free to use the comments below or contact me via email.

[4] The others, in order of my personal preference, are: concepts, coroutines and ranges. Here is a great talk quickly illustrating what else C++20 has to offer.

[5] So I personally see few reasons to choose it over C++, except maybe availability and simplicity of implementation.

[6] I’m not trying to unduly diss goto here. There are valid reasons for its use. It is also pretty interesting what is defined in terms of it.

[7] It is absolutely not the topic of this post, but considering I used this headline, I feel compelled to mention that another thing that matters is, of course, diversity and inclusion. Have a look at the #include<c++> community.

[8] Depending on how condensed and pithy you prefer to consume technical opinions, that might of course be a good thing and better suited for you than my own writing. Additionally, I might have missed some articles. If so, feel free to point that out in the comments and I will gladly read them, give proper credit where it is due and link them here.

[9] Which could very well be future versions of yourself.

[10] This is simplified of course and the way I described the order things happen in could be misleading. Of course, the decision which gun to call happens at compile time and not after fun is called at runtime.

[11] Well, it wasn’t exactly forced. It was an optional exercise for additional points in which I requested them to create a clang based tool enforcing the ordering, which was nothing more than a thinly veiled attempt to prompt them to at least think about the matter. No one took me up on it, but it was end of term and exams were coming up, so I can’t begrudge them that.

[12] That is, of course, a lie. I want you to follow my example. To cite the great Walter E. Brown: I know that all these opinions are not yet shared by all programmers - but they should be.
