Advent of Code 2020

My most valued readers! So glad you could drop by again! You are just in time to catch me singing the praises - almost as belated as they are deserved - for an annual coding competition that can be classified as the highlight of many a programmer each year. In addition, I also show and explain all my solutions for it.

In recent years, I have found myself increasingly looking forward to December. Not because of Christmas, not because of my birthday1, not even because the best thing in each successive one seems to be it finally coming to a close - but because of Advent of Code.

In line with my more general resolution of being more open and public about everything I do2, I decided I should note down my thoughts, document my experiences and publish my solutions this time around.

Keeping in line with my general tendencies, however, I am - of course - incredibly late to the party.3 Advent ended far more than 4 month ago and I only managed to complete this writeup now, not even in time to ruin the easter bunnys day4.

Nonetheless, despite their seasonal framing, the problems posed are timeless, highly educational and results can be submitted even years after the original “end”. As such, I am hopeful my musings on this particular incarnation - though late - might still prove helpful, interesting and enjoyable to some of my dear readers.

One last word of warning before we get to it:

I am aware, I do tend to say that Every. Single. Time., but this article really is structurally very different from all that came before it. Even if you enjoyed those, it might not come as a surprise if you don’t enjoy this one5.

I wrote a small introduction and a tiny conclusion tying it all together, but really, everything else was written rather independently. Therefore, this should maybe be viewed more like a collection of very small articles or commentaries - one for each day - instead of one grand, cohesive whole. You can freely jump around, look at only the explanations for a single day or even skip all code completely and just peruse my thoughts and opinions at the beginning and end.

What is this and why should I care?

I really don’t think I can put it better than the creator himself, so allow me to first point out that Eric Wastl has presented many times on the topic and watching at least one of those presentations is probably a more valuable investment of your time and attention.

If, however, you intend to stay with me for now and want the shortened, succinct version - also ripped directly from his talks - Advent of Code is what you get if you combine this6:

with something like these:

Beginning on the first of December, each day leading up to Christmas you get another door to open and peer behind. Instead of discovering a piece of chocolate - as is usual in more common variations of an advent calendar - each door reveals a brand new programming puzzle to solve, all of them flimsily tied together with a whimsical and endearing cover story.

Whilst this description alone might, to the uninitiated, sound nice but not particularly exciting, it being the only thing during the last 5 to 10 years that managed to motivate me to consistently wake up and be available at a specific time of day - let alone really early - should tell you there is something very special about it.

And indeed, there is much that differentiates it from other events and makes participating in Advent of Code a rewarding and unique experience:

-

It is not tied to any specific tool, language or environment. In fact, special care is taken to keep the problems language agnostic and not give any one - compiled or otherwise - a significant edge over others7. As you have to submit a solution, not the code which computes it, anything goes and you are free to chose whichever path you fancy. You can preprocess input, combine multiple tools, solve some puzzles with pen and paper, crowdsource or use whatever other crazy ideas you might come up with. Anything short of hacking the site is welcome and kind of the point. It really teaches you to solve the specific problem in front of you, just as you should do in this strange “real world” I have been hearing so much of.

-

Its one of a kind two part structure not only increases accessibility but lends itself particularly well as a teaching tool. You see, you do not really get one puzzle each day, you get two, the second of which is unlocked after you successfully solved the first one! Most of the time it is a more complicated variation or an extension of the first part. The effect of this structure, as I see it, is twofold: On the one hand, even complete beginners do get at least one task each day they can realistically hope to solve. On the other hand, what you have learned in part 1 can and must immediately be applied in part 2, consolidating new knowledge and fostering true understanding. You have to rethink and optimize your approach, consider asymptotic complexity and benefit from keeping your code clean and extensible.

-

The occasional interdependence of many tasks, as seen with the notorious intcode problems in 2019, serves as a wonderful incentive to not only keep code extensible from the start, but even regularly go back to maintain and clean it up. You never quite know if and when you might have to build on something written on a previous day and not considering that might come back to bite you. Again, reminiscent of and not entirely unlike this “real world” thing.

That list is not exhaustive and deeply personal, which is why I will stop now. There is, however, one aspect so important to deserve its own section in this introduction: What really sets Advent of Code apart - more than anything - from all other coding competitions I know of, is its amazing community.

Community

With more than 150000 participants vying for one of the coveted spots on a global leaderboard consisting of a very meager 100 places, one could expect a certain degree of hostility.

What is actually happening is, however, the very opposite. Seldom have I witnessed such incredible supportiveness in so competitive an environment. Everyone helps and encourages everyone else, providing tips and assistance when needed, comparing solutions, sharing thoughts and ideas and just in general being awesome to each other.

To name just one example, I invite you to scroll through this lovely reddit thread, started by the creator himself after, on the very first day of this most recent edition, the servers collapsed under the unpredictably huge load on puzzle unlock. Who got onto the leaderboard on that day was more of a function of who got to the servers first upon their restart than who solved the problem most quickly. As such, no leaderboard points were given at all. Instead of disgruntled voices protesting the decision or complaints about the crash, you’ll find only words of encouragement and understanding.

That incident was not just a fluke either. Solving the puzzles simultaneously with thousands of others around the world, all with different backgrounds, preferences and varying levels of experience and skill provides ample opportunity to learn from and inspire each other. There are even special rewards for exceptionally creative solutions, visualizations and other ideas. And memes. A lot of memes.

The community is, of course, not restricted to reddit exclusively. Many participants - all across the leaderboard - stream their solving live on platforms like twitch and youtube, searching github for “adventofcode” reveals more than 30000 repositories and various sub-communities maintain their own leaderboards.

Related blog posts also exist in abundance. Even if I most definitely won the race for most delayed wrap-up post, it is by far less of an excessive margin than you might think. In no particular order of timing and preference, as I love them all, here is a small selection of wrap-up posts. There are many more and I encourage you to search for them.

Regardless, I promised to keep this short. Enough preamble, let’s leave the gushing for another day and move on to finally discuss solutions.

Solutions

Setup and tooling

If you read my remarks above attentively, you will know that choosing which set of languages and tools to employ is a pivotal decision. Allow me to briefly explain my sophisticated thought process on that matter:

If all you have is a hammer, everything looks like a nail. If your favorite tool is a very efficient hammer, you can beat everything into submission until it wishes it were one.

Naturally, I exclusively used C++.

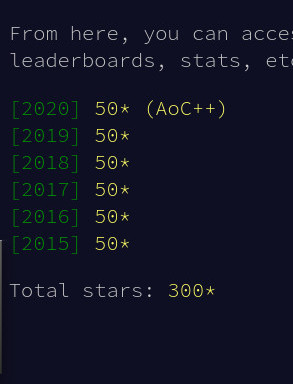

I am a lot of things, and one of them is ambitious in all the wrong ways. Last year, I made it onto the global leaderboard just once and I intended to improve on that.8 To improve my chances of doing so, I did the bare minimum of preparation and composed a few simple scripts automating repetitive, time consuming site interactions. Nothing fancy, just one for submission, one for fetching the input, copying and opening a boilerplate ready base file for each day and a very simplistic Makefile tying them together.

Disclaimer

All explanations that follow are also reproduced in a github repository, next to the code they pertain to. So if you are only interested in a solution for one specific day, it might be better to just head over there and browse to the corresponding folder.

They also - obviously - spoil the fun of solving the problems on your own. So, if you happen to know me personally and your name starts with H, J, K, M, R or S or if you simply wish to - as opposed to being forced to - do the puzzles on your own first, skip what is written below and return to compare once you are ready ;-) You may consider me sorely disappointed if you look at the solutions before your own attempts.

WHAT FOLLOWS DOES CERTAINLY NOT CONTAIN THE BEST CODE I HAVE EVER WRITTEN. I solved all of them at the time they appeared, which was 6 am in the morning where I live, between 8 - 12 hours ahead of my normal waking time xD

Day 1

Part 1

The first puzzle was simply finding two elements in a list of numbers which sum to another number(in our case the then current year, 2020).

This can, of course, be solved trivially in quadratic time, as can be seen in 01_nested_loop.cpp:

for(std::size_t i=0;i<vals.size();++i)

{

for(std::size_t j=i+1;j<vals.size();++j)

{

if(vals[i]+vals[j]==2020)

{

//found it!

}

}

}If we want to get fancy, we can sort our input in O(n log n) time, iterate over it and find the potentially corresponding number in O(log n) time, yielding a total running time of O(n log n) again:

std::sort(std::begin(vals),std::end(vals));

for(auto v:vals)

{

if(std::binary_search(std::begin(vals),std::end(vals),2020-v))

{

//found it!

}

}Yes, binary_search in the standard library returns a boolean. This is one of the rare occasions where that is actually exactly what we want. For all the others, there is lower_bound and upper_bound.

That is rather nice, but if we are willing to sacrifice just a tiny little bit of additional memory(O(m) with m being the searched for sum) and assume all numbers are non-negative we could even go linear:

std::bitset<2021> seen{};

for(auto v: vals)

{

if(v>2020) continue;

seen.set(v);

if(seen.test(2020-v))

{

//found it!

}

}Without the assumption of non-negativity - which held for my input, but might not for everyones - we can still do a variation of this, but would have to use significantly more memory and/or rely on some sort of hashset.

Part 2

For a tiny bit more difficulty (and were the numbers bigger also a significant spike in runtime for the trivial solutions), the second part asks us to find three numbers that sum to 2020. Luckily for us, the list is reasonably short and even the now cubic nested for loops produce the correct result in a very short time(a very small fraction of a second on even my old machine):

for(std::size_t i=0;i<vals.size();++i)

{

for(std::size_t j=i+1;j<vals.size();++j)

{

for(std::size_t k=j+1;k<vals.size();++k)

{

if(vals[i]+vals[j]+vals[k]==2020)

{

//found it!

}

}

}

}Both of our slightly more sophisticated methods for part 1 can relatively easily be adapted to this new constraint. For the one applying sorting, simply add yet another for loop, yielding a O(n^2 log(n)) runtime. Nothing to write home about, but a step up from cubic…

std::sort(std::begin(vals),std::end(vals));

for(std::size_t i=0;i<vals.size();++i)

{

for(std::size_t j=i+1;j<vals.size();++j)

{

const auto target = 2020-vals[i]-vals[j];

if(std::binary_search(std::begin(vals),std::end(vals),target))

{

//found it!

}

}

}Again, we can get rid of that tiny annoying log(n) factor, given the same assumptions and using the same technique as above. Adding another for loop results in the following code, for “only” quadratic time.

std::bitset<2021> seen{};

for(std::size_t i=0;i<vals.size();++i)

{

if(vals[i]>2020) continue;

seen.set(vals[i]);

for(std::size_t j=i+1;j<vals.size();++j)

{

if(vals[j]>2020) continue;

seen.set(vals[j]);

const auto target = 2020-vals[i]-vals[j];

if(target<2021 && target>=0 && seen.test(target))

{

std::cout<<vals[i]*vals[j]*target<<'\n';

return 0;

}

}

}More than sufficient for the small input set, yet I really did not like stopping here.

Whilst it remains an open research problem whether or not the general 3SUM problem is solvable in subquadratic time, we do have some additional constraints. Our input consists of only non-negative integers smaller or equal to our target value. Wikipedia claims that for integers in range -n to n there exists a solution in O(n log n) utilizing a Fast Fourier Transform on the input set represented as a bit vector, but unfortunately I failed to understand how exactly such a solution could be constructed.

So I tried to find a different, better solution, gave it a tiny bit more thought and my contemplations yielded the following code:

std::sort(std::begin(vals),std::end(vals));

std::size_t lower = 0, higher = vals.size()-1;

while(lower<higher)

{

const auto target = 2020-vals[lower]-vals[higher];

if(std::binary_search(std::begin(vals),std::end(vals),target))

{

//found it!

}

else if(vals[lower+1]-vals[lower]<vals[higher]-vals[higher-1])

++lower;

else

--higher;

}The runtime is bounded fairly simple: lower and higher start n values apart from each other and in each iteration either one is moved towards the other or a solution is found. As such, the while loop runs at most n times. The most expensive operation within is the binary search, running in log n time. As such, the overall complexity is O(nlog(n)) (for sorting) + O(nlog(n)) (for the loop), yielding a glorious O(n*log(n)) in total!

That is all fine and dandy, it even worked for my input and the few others I could find and try it on at the time, but that alone is far from sufficient to prove it could work for any input. As such, I tried to sketch a proof and in so doing quickly had to come to a shattering realization: this is completely wrong and ridiculously stupid!

For instance, it fails with the following simple input:

1 9 1009 1010 2000

Nonetheless, I considered this failure educational and working out why it works for some inputs and how exactly it fails so spectacularly on many others can be quite instructive, which is why I still showcase it here.

Day 2

Part 1

The second day was algorithmically far easier(and imho less interesting) than the first one, as the only difficulty here was avoiding typos and parsing the input. You got a list of words and policies, which described how to determine if a given word was valid:

1-3 p: word

Count the occurrences of the given letter(p) in the given word(word) and check it is present between the lower(1) and higher(3) bound times. We then had to count the number of valid words, i.e. words that satisfied their own policy.

Really, this was mostly just a typing speed challenge, with standard algorithms doing the bulk of the work. I simply defined a struct describing one such rule/word pair:

struct policy_word

{

char letter;

int min, max;

std::string str;

};Followed by overloading operator«(lazily, without any error handling), so I could extract properly typed input from stdin:

std::istream& operator>>(std::istream& in, policy_word& p)

{

char dummy;

in>>p.min;

in>>dummy;

in>>p.max;

in>>p.letter;

in>>dummy;

in>>p.str;

return in;

}That allowed me to conveniently combine istream_iterator and std::count_if to solve the actual problem:

std::cout<<std::count_if(std::istream_iterator<policy_word>{std::cin},{},[](auto pw)

{

const auto cnt=std::count(std::begin(pw.str),std::end(pw.str),pw.letter);

return cnt>=pw.min && cnt<=pw.max;

})<<'\n';Part 2

Part 2 was even more trivial, as the interpretation of the policies was changed. Now a valid word has to have the given letter at exactly one of the given positions. Simply change the validity checking lambda and you get the following code:

std::cout<<std::count_if(std::istream_iterator<policy_word>{std::cin},{},[](auto pw)

{

return pw.str[pw.min-1]!=pw.str[pw.max-1] && (pw.str[pw.min-1]==pw.letter || pw.str[pw.max-1]==pw.letter);

})<<'\n';The only potential issue, and obviously one I stumbled upon and which cost me one minute because of an incorrect answer is that position - of course - starts at 1, not 0 here.

Day 3

Part 1

Yeahy, may the ascii art begin! This was the first of a classical kind of 2d grid based puzzles this year! It was also the first problem this year where what little preparation I had done came in handy, having predefined point and vector types.

Given a map consisting of open spaces (represented as ‘.’) and trees(represented as ‘#’), all we had to do was check how often one would hit a tree when going down a certain slope. Beginning in {0,0}, just adding the vector {3,1} until you hit the bottom, count the elements with value #. To ensure you always reach the bottom, the grid extends infinitely to the right, simply looping around in classic pacman fashion - which implemented via a simple % on the x value:

vec2d direction{3,1};

point2d pos{0,0};

int count = 0;

while(pos.y<static_cast<int>(grid.size()))

{

pos.x%=grid[0].size();

if(grid[pos.y][pos.x]=='#')

++count;

pos+=direction;

};Part 2

Part 2 was simply doing the same thing with multiple slopes and multiplying the results. Nothing fancy, just moving the counting to a lambda and a small range-for does the trick:

for(auto d: {vec2d{1,1},vec2d{3,1},vec2d{5,1},vec2d{7,1},vec2d{1,2}})

result*=count_trees(d);Day 4

Part 1

Given a list of key:value pairs, separated by empty lines, we were first asked to simply check for the presence of various fields. Quite similar to the first day, this was mostly an exercise in getting parsing somewhat right, which, frankly, annoyed me a little at the time. As on that day, I first simply defined a type for the data:

struct password_data

{

std::unordered_map<std::string,std::string> data;

};followed by an overloaded operator»:

std::istream& operator>>(std::istream& in, password_data& pwd)

{

pwd.data.clear();

std::string line;

std::stringstream strm;

while(std::getline(in,line) && !line.empty())

{

for(auto c: line)

strm<<(c==':'?' ':c);

strm<<' ';

}

std::string name, data;

while(strm>>name>>data)

pwd.data[name]=data;

if(pwd.data.empty())

in.setstate(std::ios::failbit);

else

in.clear();

return in;

} We then define what it means to be valid:

bool is_valid(const password_data& pwd)

{

std::array<std::string,7> required{"byr","iyr","eyr","hgt","hcl","ecl","pid"};

for(const auto& part: required)

if(pwd.data.find(part)==std::end(pwd.data))

return false;

return true;

}and let count_if do all the work:

std::cout<<std::count_if(std::istream_iterator<password_data>{std::cin},{},is_valid)<<'\n';The different maps were separated by an empty line in between them, which made it rather tedious (and likely a bad idea) to parse as I did by overloading operator» on std::istream. I also - as usual - committed another ridiculously stupid mistake that cost me a good 5 minutes:

Note how I read lines until either std::getline fails or returns an empty line. That is because there are actually two ways a collection of related key-value pairs is terminated: With an empty line OR with the end of input. The problem occurs in the latter case. If std::getline fails, it sets, appropriately, the std::ios::failbit on the underlying stream. If I do not clear it, however, this indicates to the istream_iterator that extraction failed and the last entry will not be accepted.

Part 2

Now, in addition to merely checking for their presence, we were tasked with checking each fields validity based on certain criteria. Again, not too difficult, just quite tedious and easy to get wrong, yielding somewhat unsightly code by necessity. Originally, I had a gigantic if-else block and repeated code, just to get it done as quickly as possible(faaar too slow for the leaderboard, of course), but looking at that code was the sort of torture I could not possibly subject my dear readers to, so I went kind of overboard and constructed a bunch of composable function objects that allowed me to define the criteria in an almost declarative style:

const std::vector<std::pair<std::string_view,std::function<bool(std::string_view)>>> field_validator

{

{"byr", all(is_length(4),is_number,chain(as_number,is_between(1920,2002)))},

{"iyr", all(is_length(4),is_number,chain(as_number,is_between(2010,2020)))},

{"eyr", all(is_length(4),is_number,chain(as_number,is_between(2020,2030)))},

{"hgt", one_of

(

all(ends_with("cm"),chain(remove_suffix(2),is_number),chain(remove_suffix(2),as_number,is_between(150,193))),

all(ends_with("in"),chain(remove_suffix(2),is_number),chain(remove_suffix(2),as_number,is_between(59,76)))

)

},

{"ecl", is_one_of("amb","blu","brn","gry","grn","hzl","oth")},

{"pid", all(is_length(9),is_number)},

{"hcl", all(starts_with("#"), chain(remove_prefix(1),is_hexnumber))},

};The business logic ones are rather trivial and uninteresting. The ones used for composition can, however, prove educational:

constexpr auto all = [](auto... checkers) { return [=](const auto& str) { return (checkers(str) && ...); }; };

constexpr auto one_of = [](auto... checkers) { return [=](const auto& str) { return (checkers(str) || ...); }; };

constexpr auto chain = [](auto fun, auto... funs)

{

const auto chain_impl = [](auto recurse, auto fun, auto... funs)

{

if constexpr(sizeof...(funs)==0)

return fun;

else

return [=](const auto& arg) { return recurse(recurse, funs...)(fun(arg)); };

};

return chain_impl(chain_impl,fun,funs...);

};all simply takes a bunch of callables and returns a new callable that utilizes C++17’s fold expressions to call each one of the given checkers in a chain connected via &&, i.e. returning true exactly if all of the given checkers would return true.

one_of does pretty much the same thing, just with ||, i.e. or, instead of and.

chain, as the other two, takes a collection of callables and returns another callable. This one, however, is constructed in a little more complicated fashion. First, we call a helper chain_impl. This is necessary to get recursion, which is otherwise not easily available in lambda expressions. The helper does one of two things:

- If there is only one callable left, it is returned.

- Otherwise, we construct a new callable that calls the first one and passes the result on to whatever the recursion will construct for all other functions.

Effectively, given functions a, b, c and d, this will construct an expression equivalent to d(c(b(a(input)))), i.e. it chains the calls, using the result of the first as input to the second and so on(similar to the use of pipes in Unix, a | b | c | d).

Day 5

Part 1

This one was a bit more interesting and certainly educational. We were given a lovely description of how a certain airliner numbers their seats by repeatedly subdividing a range, throwing away either the lower or the upper half with each incoming letter. Whilst reminiscent of binary search, it is basically even simpler. The description mirrors exactly the classical definition of what a bit actually is: something that halves your prior uncertainty. As such, the strings proved to be nothing more than a fancy encoding for a 10-digit binary number, MSB first. With this realization, completing the task - that of finding the highest id - was trivial:

const auto seat_id=[](const std::string& str)

{

std::uint16_t id = 0;

for(auto c: str)

{

id<<=1;

if(c=='B' || c=='R')

id|=1;

}

return id;

};

std::uint16_t max_id = std::numeric_limits<std::uint16_t>::min();

for(std::string str;std::cin>>str;)

{

max_id = std::max(max_id,seat_id(str));

}Note how we cannot use max_element, as that sadly requires a forward iterator.

Part 2

Given the same list, we were now tasked with finding the one and only missing element. The list does not necessarily start or end at the lowest and highest possible id, but our missing element was declared to be somewhere in between. This could - again - be solved in more than one way, with up and downsides of runtime, memory usage or readability. First, the more readable solution, at an additional O(n) memory (for storing all ids) and O(n log n) runtime (for sorting them):

std::vector<std::uint16_t> ids;

std::transform(std::istream_iterator<std::string>{std::cin},{},std::back_inserter(ids),seat_id);

std::sort(std::begin(ids),std::end(ids));

std::cout<<(*std::adjacent_find(std::begin(ids),std::end(ids),[](auto lhs, auto rhs) { return rhs-lhs>1; })+1)<<'\n';Already pretty decent, but we can do better by knowing and abusing a tiny bit of bit magic, specifically the following lovely properties of the XOR operation:

A ^ A == 0

I.e. a number xor’ed with itself disappears. Further:

A^B == B^A

That is, the operation is commutative, the order in which it is performed does not matter. And finally:

A^0 == A

A number xor’ed with 0 does not change.

All of this can be combined to imply this:

(A^B^C^E...) ^ (A^B^C^D^E...)

will be equivalent to

(A^A) ^ (B^B) ^ (C^C) ^ (E^E) ... ^ D

meaning the only one left over that is not zeroed will be our missing element! This yields this beautifully efficient solution that no one without knowledge of the trick has any realistic hope to grasp:

std::uint16_t min=std::numeric_limits<std::uint16_t>::max(), max=std::numeric_limits<std::uint16_t>::min();

std::uint16_t xored = 0, all = 0;

std::for_each(std::istream_iterator<std::string>{std::cin},{},[&](const auto& str)

{

const auto id = seat_id(str);

min=std::min(min,id);

max=std::max(max,id);

xored^=id;

});

for(std::uint16_t i=min;i<=max;++i)

all^=i;

std::cout<<(xored^all)<<'\n';Knowing the trick, it does seem rather straightforward so and has only constant memory footprint and linear runtime, which is just fabulous ;-)

Accessibility can, however, be somewhat regained whilst not only keeping but even improving ever so slightly on this runtime by recognizing there is a simpler pair of operations with very similar properties: + and -.

A+0 = A

A-A = 0

A+B = B+A

Meaning, of course,

(A+B+C+D+E...) - (A+B+C+E...) = (A-A) + (B-B) + (C-C) + D + (E-E)... = D

Simply summing all elements and subtracting that sum from what it would be if none were missing yields exactly the one missing element! Knowing a bit of history and Gauss’s famous formula for computing the sum of all integers up to a given bound((n*n+n)/2), we can skip the second loop and directly compute the desired sum in closed form:

std::uint16_t min=std::numeric_limits<std::uint16_t>::max(), max=std::numeric_limits<std::uint16_t>::min();

int sum = 0;

std::for_each(std::istream_iterator<std::string>{std::cin},{},[&](const auto& str)

{

const auto id = seat_id(str);

min=std::min(min,id);

max=std::max(max,id);

sum+=id;

});

--min;

std::cout<<((max*max+max)/2-(min*min+min)/2-sum)<<'\n';Day 6

Part 1

Given a number of “yes-or-no-questions” - each identified by a single character - we were provided with a list of “answers”, i.e. lines containing a character if the corresponding question was answered in the affirmative and not containing it otherwise.

Those answers were separated into groups by empty lines and our first task was to count in how many groups each “question”(i.e. character) was present. We then should return the sum of those counts.

Again - as seems to be a theme in those early days - the most difficult or rather time consuming part here is parsing question and input correctly, with the actual task being simple counting. Here is how I did it:

std::array<std::size_t,256> counts{};

const auto begin = &counts['a'], end = &counts['z'+1];

const auto finish_group = [&]()

{

total+=std::count_if(begin,end,[&](auto v){ return v!=0; });

std::fill(begin,end,0);

};

while(std::getline(std::cin,line))

{

if(line.empty())

finish_group();

else

{

for(auto c: line)

++counts[c];

}

}

finish_group();Nothing much to explain here. I simply read the input, line by line, and count the frequency of all characters in an array indexed by the characters value - just like one would do when implementing counting sort. After an empty line, I finalize the group by counting how many entries were not 0 - that means they appeared at least once - and reset the counts.

Using an array to count is, of course, a bit of a waste of space, but I deemed it acceptable as the resulting code is succinct, readable and efficient. It also turned out to be useful for part 2.

Part 2

The second part did not, in fact, significantly ramp up the difficulty. Instead of counting how often each answer was given in total, we should only count those answers in a group which were given by everyone in it. As I already counted the frequency, all that was left was determining the group size:

++group_size;

for(auto c: line)

++counts[c];Followed by simply changing a “!=0” to a “==group_size”:

const auto finish_group = [&]()

{

total+=std::count(begin,end,group_size);

group_size=0;

std::fill(begin,end,0);

};It might have broken if anyone had enthusiastically answered yes more than once on the same question, which, to my great relief, was not the case.

Day 7

Part 1

Finally a graph problem! Given a number of bags, all of which containing a number of different bags, first count all that will, in one of those interior ones, contain a “shiny gold” one. Before tackling the more interesting part here, we unfortunately do have to get some annoying parsing out of the way, which - as so frequently - took the bulk of my required time.

Which bags contain how many of which other bags was given as a string of this form:

vibrant plum bags contain 5 faded blue bags, 6 dotted black bags.

Or sometimes:

faded blue bags contain no other bags.

It ought to be trivial, but I am embarrassed to say that this years problems really drove home the point that my skills in the parsing area are severely lacking and I have some serious brushing up to do. Some higher proficiency with regular expressions, for instance, might have tremendously simplified the following abomination I came up with at the time:

std::istream& operator>>(std::istream& in, rule& r)

{

r.own_name.clear();

r.can_contain_n.clear();

std::string str, last;

while(in>>str && str!="contain")

{

r.own_name+=last;

last = str+" ";

}

r.own_name.pop_back();

if(!in)

return in;

do

{

std::size_t n;

std::string name;

std::string n_str;

in>>n_str;

if(n_str=="no")

{

in>>str>>str>>str;

break;

}

n=std::stoi(n_str);

while(in>>str && str.back()!=',' && str.back()!='.')

{

name+=str+" ";

}

name.pop_back();

r.can_contain_n[name]=n;

}

while(str.back()!='.');

return in;

}Don’t look at it too closely. Great! Now that we have this, let’s return to the root of our problem. Or rather the reverse. Interpreting the “contains” relation as edges in a graph, we can have one node for each bag type and an edge to all other bags in which it might be contained:

For instance, this description:

green bags contain 2 red bags, 2 black bags.

red bags contain 1 blue bag.

blue bags contain 1 shiny gold bag, 3 black bags.

black bags contain no other bags.

shiny gold bags contain 7 gold bags.

would yield the following:

"red" <--------- "blue" <----- "shiny gold" <----- "gold"

| ^

v |

"green"<--"black"__|

Thanks to the eldritch horror above, such a reversed graph can be constructed easily, representing it as a map from node-name to list-of-children-names:

std::unordered_map<std::string,std::vector<std::string>> reverse_graph;

rule r;

while(std::cin>>r)

{

for(auto [name,n]:r.can_contain_n)

reverse_graph[name].push_back(r.own_name);

}Given that, all we need to do is walk each possible path away from our starting node (“shiny gold”) and count the total number of nodes we pass by(remembering which we have already seen and counted on the way):

std::vector<std::string> left;

std::unordered_set<std::string> seen;

left.push_back("shiny gold");

std::size_t count = 0;

while(!left.empty())

{

const auto next = left.back();

left.pop_back();

for(const auto& target: reverse_graph[next])

{

if(seen.find(target)==std::end(seen))

{

seen.insert(target);

left.push_back(target);

++count;

}

}

}Part 2

Wonderful! Now for part 2 we were tasked with doing basically the reverse. Instead of counting how many different kinds of bags can contain a shiny gold one, we are asked how many bags are contained within. This requires us to consider one more piece of information given in our data, the number telling us how often each kind of bag is contained within. Reversing the edges from part 1 and labeling them with a “cost” representing their quantity yields a graph like this one:

"red" ----1----> "blue" --1--> "shiny gold" ---7--> "gold"

^ |

2 3

| |

"green"-2->"black"<__|

Which we will, once again, simply walk recursively from our starting location, multiplying the results by the given quantities:

struct edge

{

std::string target;

std::size_t weight;

};

std::size_t number_of_interior_bags(const std::string& outer_name, const std::unordered_map<std::string,std::vector<edge>>& graph)

{

std::size_t count = 1;

auto it = graph.find(outer_name);

if(it==std::end(graph))

return count;

for(const auto& [name,n]: it->second)

count+=n*number_of_interior_bags(name,graph);

return count;

}Day 8

Part 1

The interesting emulation problems have finally started! I really loved all the intcode problems in 2019, so this was getting my hopes up!

We started out slowly so and should just fix a simple program which executes one of only three types of instructions:

- acc, adding to the accumulator

- jmp, a relative jump

- nop, my favorite thing, doing nothing

As given, the program contained an infinite loop and we were tasked with determining its state once we enter the loop, i.e. once we execute an instruction for the second time. Without further ado, lets jump right into the code.

First, we define structs for our state and instructions:

struct state

{

int accumulator = 0;

int pc = 0;

};

enum class opcode

{

acc,

jmp,

nop

};

struct instruction

{

opcode op;

int arg;

};Parsing them is thankfully easy:

std::istream& operator>>(std::istream& in, instruction& ins)

{

std::string name;

in>>name;

if(name=="acc")

{

ins.op=opcode::acc;

return in>>ins.arg;

}

else if(name=="jmp")

{

ins.op=opcode::jmp;

return in>>ins.arg;

}

else if(name=="nop")

{

ins.op=opcode::nop;

return in>>ins.arg;

}

return in;

}As is executing them, either adding to the accumulator or adding to the program counter or alternatively doing nothing at all:

void execute(const instruction& ins, state& s)

{

switch(ins.op)

{

case opcode::acc: s.accumulator+=ins.arg; break;

case opcode::jmp: s.pc+=ins.arg-1; break;

case opcode::nop: break;

}

}To solve our problem, we first read all instructions into a vector, setup a pristine state with accumulator = pc = 0 and begin executing. After each instruction we increment the program counter to determine the next one, whilst keeping track - in a simple hashset - of all positions we have encountered so far. Upon reaching one that was previously seen, we declare the cycle detected and break our loop:

std::vector<instruction> instructions(std::istream_iterator<instruction>{std::cin},{});

state s{};

std::unordered_set<int> seen;

while(seen.find(s.pc)==seen.end())

{

seen.insert(s.pc);

execute(instructions[s.pc],s);

++s.pc;

}

std::cout<<s.accumulator<<'\n';Lovely, lets move on to…

Part 2

Now that we are able to emulate our loop, we are supposed to break it. There is one single nop or jmp instruction that if flipped to the opposite will cause our program to terminate by causing the program counter to reach the end of memory.

The most trivial and perfectly sufficient idea is to simply brute force it. Iterate over every instruction and if it is a jmp or nop, toggle it and try running our program from part 1. If a loop was detected, we try the next one. If not, we have found the instruction to change:

for(auto& ins: instructions)

{

if(ins.op!=opcode::acc)

{

ins.op=ins.op==opcode::jmp?opcode::nop:opcode::jmp;

auto [loop_detected, result] = detect_loop(instructions);

if(!loop_detected)

{

std::cout<<result.accumulator<<'\n';

break;

}

ins.op=ins.op==opcode::jmp?opcode::nop:opcode::jmp;

}

}To keep it readable, I outsourced loop detection and gave it a nice, expressive return type:

struct execution_result

{

bool loop_detected = false;

state final_state;

};

execution_result detect_loop(const std::vector<instruction>& instructions)

{

state s{};

std::vector<bool> seen(instructions.size(),false);

while(s.pc>=0 && s.pc<static_cast<int>(instructions.size()) && !seen[s.pc])

{

seen[s.pc] = true;

execute(instructions[s.pc],s);

++s.pc;

}

return {s.pc>=0 && s.pc<static_cast<int>(instructions.size()), s};

}Whilst this is perfectly sufficient in our case and terminates within a small fraction of a second even on my old machines, it is not exactly ideal. Worst case, its asymptotic complexity seems quadratic. Consider this example:

nop 1

nop 1

nop 1

....

jmp -program_size

It would run over each nop, run the complete program to the very end, detect the loop and try the next one. That is, each attempt before the last executes n-1 nop and 2 jmp instructions and we need to do that n-1 times. Quite terrifying. So lets do better:

auto reaches_end = compute_end_reachability(instructions);

state s{};

bool changed_one = false;

while(s.pc>=0 && s.pc<static_cast<int>(instructions.size()))

{

if(!changed_one)

{

const auto target_pc = s.pc+instructions[s.pc].arg;

if(instructions[s.pc].op==opcode::jmp && !reaches_end[s.pc] && reaches_end[s.pc+1])

{

instructions[s.pc].op=opcode::nop;

changed_one = true;

}

else if(instructions[s.pc].op==opcode::nop && !reaches_end[s.pc] && reaches_end[target_pc])

{

instructions[s.pc].op=opcode::jmp;

changed_one = true;

}

}

execute(instructions[s.pc],s);

++s.pc;

}

std::cout<<s.accumulator<<'\n';Looks a bit more complex than our previous attempt, but the running time turns out to be linear. Allow me to first explain my reasoning:

Assuming we knew for each instruction if beginning execution from it will reach the end, we could execute our code once and simply change the first instruction we encounter that could not reach the end but could if toggled. Doing such a thing would run in linear time, as no loops are entered and each instruction is executed at most once. That is exactly what you see happening in the code above.

This, however, delegates the hard problem to figuring out which instructions can and cannot reach the end:

std::vector<std::vector<int>> compute_jump_sources(const std::vector<instruction>& instructions)

{

std::vector<std::vector<int>> jump_sources(instructions.size());

for(int pc = 0; pc<static_cast<int>(instructions.size());++pc)

{

const auto target_pc = pc+instructions[pc].arg;

if(instructions[pc].op==opcode::jmp && target_pc>=0 && target_pc<static_cast<int>(instructions.size()))

jump_sources[target_pc].push_back(pc);

}

return jump_sources;

}

std::vector<bool> compute_end_reachability(const std::vector<instruction>& instructions)

{

std::vector<bool> reaches_end(instructions.size(),false);

std::vector<bool> seen(instructions.size(),false);

std::vector<std::vector<int>> jump_sources = compute_jump_sources(instructions);

const auto mark_upward = [&](int start_pc, bool value, auto recurse) -> void

{

for(int pc = start_pc; pc>=0 && !seen[pc]; --pc)

{

const auto target_pc = pc+instructions[pc].arg;

if(instructions[pc].op==opcode::jmp)

{

if(target_pc<0 || target_pc>=static_cast<int>(instructions.size()))

value = true;

else if(seen[target_pc])

value = reaches_end[target_pc];

else

break;

}

seen[pc] = true;

reaches_end[pc] = value;

for(auto source: jump_sources[pc])

recurse(source,value,recurse);

}

};

mark_upward(instructions.size()-1,true,mark_upward);

return reaches_end;

}I am iterating over all instructions once to determine from where each instruction can be reached(compute_jump_sources). Then I iterate again and mark which instructions can reach the end. Turns out that is not quite so hard either. We have to realize the following simple facts:

- A non-jmp instruction can reach the end if it is at the end or if the one following it could reach the end

- A jmp instruction can reach the end if its target is the end or can reach the end

As such, we start with the only one we know about - the end - and work our way upwards. If its not a jump, we simply mark it with the previous result. If its a jump and we know about its destination, we continue with the destinations value. If its a jump and we don’t know about its destination, we can’t proceed and break. Furthermore, if the current instruction can be reached by a jump, we recurse and work our way upward from its source, too.

Runtime analysis of this might seem like a mess, but our seen-vector rushes in to save the day: There are at most n jump sources in total (as there are at most n jmp instructions), as such recurse can be called at most n times in total), so we have at most n loops. Each iteration of the loop either breaks or sets a value in the seen vector to true which was not set previously. As each loop can of course only break once, the total number the first can happen is n. As seen has exactly n elements and we only enter an iteration and set a value if it was not set, the total number the second can happen - over all loops - is also n.

Just lovely, isn’t it?

Day 9

Part 1

Surprisingly easy given the previous two assignments, our quest on this day was simply adding up numbers. We were given a list of them and should find the first one that cannot be represented as a sum of two of the 25 preceding ones. The more perceptive of my readers probably realized by now, that in the grand tradition of instructional spiraling, this is but a slightly more complicated variation of what we did on day 1!

As such, a trivial - yet horrific in time complexity - solution is to brute force our way through the problem with 3 ugly nested loops:

for(std::size_t i=n;i<data.size();++i)

{

bool found = false;

for(std::size_t j=i-n;j<i;++j)

{

for(std::size_t k=j+1;k<i;++k)

{

if(data[j]+data[k]==data[i])

{

found = true;

break;

}

}

if(found) break;

}

if(!found)

{

std::cout<<data[i]<<'\n';

break;

}

}Funnily enough, that is still fast enough and if we analyzed its complexity at university level, we would call it O(n), as 25 is a constant and so is 25^2. 375 * n is still O(n), so we are golden xD. Well, as you might have guessed from my somewhat snappy tone here, I don’t really like that much. We also keep all of our range in memory right now, which could be avoided.

We only need the last 25 numbers, so lets start with that:

template <typename T,std::size_t N>

class trivial_fixed_ring

{

public:

using value_type = T;

void push_back(const T& value)

{

data_[start_++] = value;

start_%=N;

}

auto& operator[](std::size_t idx)

{

return data_[(start_+idx)%N];

}

auto& operator[](std::size_t idx) const

{

return data_[(start_+idx)%N];

}

auto size() const { return N; }

private:

std::array<T,N> data_;

std::size_t start_ = 0;

};Yeah, as there is none in the standard library9, implementing a small, bad and severely broken ring buffer might be ever so slight overkill, considering the input list is a mere 1000 numbers and the approach above runs in the fraction of a fraction of a fraction of a second, but who am I if not one to always entertain the notion of severely overengineering the most trivial of tasks?

Utilizing this data structure, our solution changes to look like this:

constexpr auto n = 25;

trivial_fixed_ring<int,n> data;

std::copy_n(std::istream_iterator<int>{std::cin},n,std::back_inserter(data));

int next;

while(std::cin>>next)

{

bool found = false;

for(std::size_t i=0;i<data.size();++i)

{

for(std::size_t j=i+1;j<data.size();++j)

{

if(data[i]+data[j]==next)

{

found = true;

break;

}

}

if(found) break;

}

if(!found) break;

data.push_back(next);

}

std::cout<<next;Our theoretical runtime can be reduced by using the exact same methods employed on day 1: either via sorting or via wasting a potentially huge amount of memory.

Let’s do sorting first. We can’t sort in place, as the original order dictates which elements will be replaced in succeeding steps, which is why we have to copy them into an additional buffer first:

std::array<int,n> buffer;

std::partial_sort_copy(std::begin(data),std::end(data),std::begin(buffer),std::end(buffer));We then walk this buffer once, binary-searching for the missing element:

bool found = false;

for(auto v: buffer)

{

found = std::binary_search(std::begin(buffer),std::end(buffer),next-v);

if(found) break;

}

if(!found) break;Lovely, isn’t it? We reduced our complexity down to O(n) * O(25 log 25), which is, asymptotically speaking, completely irrelevant. If the constant were ever increased, however, it could prove useful to reduce it even further.

Last time, we had a convenient constraint on how big our numbers could get, which was completely thrown out for this task. Numbers here can - and apparently do - get arbitrarily big, so its kind of impossible to allocate a big enough array in advance. Whilst we could determine this number in linear time(by simply scanning the input once and saving max and min values), a simple bitset won’t do, as our sliding window might contain more than one copy of the same value and we have to keep track of when all of those fall out of the window, i.e. we have to count. Considering my solution was >20 million, keeping one integer for each possible one in the input constitutes unfathomable abuse of memory even for the likes of me, so I bailed out and decided on using a hashmap instead, which will - at least on average - still provide me with O(1)-ish access time:

std::unordered_map<int,int> seen;

for(auto v: data) ++seen[v];

int next;

while(std::cin>>next)

{

bool found = false;

for(auto v: data)

{

found = seen[next-v]>0;

if(found) break;

}

if(!found) break;

--seen[data[0]];

++seen[next];

data.push_back(next);

}Part 2

Surprisingly even easier than part 1 this time. Take the number we just obtained and find a contiguous set of size>2 in our data that sums to it. We can do this easily by keeping a running set, always adding the next number and - if we ever run over our target - erase from the start until we are <= to it again:

std::vector<int> data(std::istream_iterator<int>{std::cin},{});

std::size_t sum = data[0], min = 0;

for(std::size_t i=1;i<data.size();++i)

{

sum+=data[i];

while(sum>target && min<i)

{

sum-=data[min];

++min;

}

if(sum==target)

{

auto [min_it,max_it] = std::minmax_element(&data[min],&data[i]+1);

std::cout<<*min_it+*max_it<<'\n';

break;

}

}Day 10

Part 1

Travel power adapters, oh my. The cover story is a cute one, but the actual problem is rather straightforward. Given a number of “joltage” adapters output values, connectable if they are between 1 and 3 apart and the second is higher than the first, figure out how often they are apart by 1 and how often they are apart by 3 if all were connected together. Since we never can connect a higher to a lower adapter, we simply sort and count the difference between every two elements:

std::vector<int> jolts(std::istream_iterator<int>{std::cin},std::istream_iterator<int>{});

std::sort(std::begin(jolts),std::end(jolts));

std::size_t diff1 = 0, diff3 = 0;

int last = 0;

for(std::size_t i = 0;i<jolts.size(); ++i)

{

auto diff = jolts[i]-last;

if(diff==1)

++diff1;

if(diff==3)

++diff3;

last = jolts[i];

}

std::cout<<(diff1*(diff3+1))<<'\n';Part 2

That was ever so slightly more difficult. My first hunch was a simple recursive solution, counting the options:

std::size_t count_options(const std::vector<long long>& jolts, long long last_jolt, std::size_t next_idx)

{

long long begin_jolt = last_jolt;

std::size_t result = 0;

for(std::size_t i=next_idx;i<jolts.size(); ++i)

{

const auto diff = jolts[i]-last_jolt;

if(diff>3)

return 0;

if(diff<3 && i<jolts.size()-1)

result+=count_options(jolts,last_jolt,i+1,cache);

last_jolt = jolts[i];

}

return result + 1;

}Turns out that is not ideal for a number of reasons, most obvious among them is runtime and repeated work. If, for instance, we skip the first adapter and take the second and third on one path, whilst we take the first, skip the second and take the third, the total may be different, but what follows afterwards is exactly the same. Nonetheless, the code above will compute this twice. Every subpath can be reached in a multitude of ways and I recompute it every. single. time. Let’s fix that:

std::size_t count_options(const std::vector<long long>& jolts, long long last_jolt, std::size_t next_idx, std::map<std::pair<long long,std::size_t>,std::size_t>& cache)

{

if(auto it=cache.find({last_jolt,next_idx});it!=std::end(cache))

return it->second;

long long begin_jolt = last_jolt;

std::size_t result = 0;

for(std::size_t i=next_idx;i<jolts.size(); ++i)

{

const auto diff = jolts[i]-last_jolt;

if(diff>3)

return 0;

if(diff<3 && i<jolts.size()-1)

result+=count_options(jolts,last_jolt,i+1,cache);

last_jolt = jolts[i];

}

return cache[{begin_jolt,next_idx}] = result + 1;

}I employed the time honored tradition of slapping a cache onto my recursion to avoid duplicating work. This turns out to be more than fast enough for our problem and a result is available within the fraction of a second again. Nonetheless, we could have done even better, even if I failed to figure that one out myself in the heat of the moment. It was pointed out to me on the #include discord and is visible in this solution. Instead of going forward and counting what could follow, we can - true to the spirit of dynamic programming - go backward and count the pathes we have already seen. Basically the same idea, but we can save the hashmap and know ahead of time how many entries we will need.

Wonderful, but checking the lovely reddit, you will figure out there is an even better, if far more mathy10 and slightly less intuitive way running in what is apparently linear time. Turns out there is a connection to the tribonacci sequence.

That was fun and I almost got near the global leaderboard for once, rank 508, my best so far this year.)

Day 11

Part 1

Cellular automata! Quite fitting, to honor John Conway’s legacy - in the sad year of his passing - with another one of those. The cover story here is about seats and people admirably practicing social distancing. If they have too many neighbors, they move away. Given an initial state and a simple set of rules, we had to simulate the seating constellations until they reach a stable, unchanging state. Rather straightforward, so edges, corners and the fact that all cells update simultaneously always complicates issues slightly. Here is one update step:

constexpr std::array<vec2d,8> directions{{{1,0},{0,1},{1,1},{-1,0},{0,-1},{1,-1},{-1,1},{-1,-1}}};

auto next = grid;

bool changed = false;

foreach_in_square({0,0},{grid_width-1,grid_height-1},[&](auto p)

{

if(grid[p.y][p.x]=='.') return;

std::size_t adjacent_count = 0;

for(auto d: directions)

{

const auto target = p+d;

if(target.x>=0 && target.x<grid_width && target.y>=0 && target.y<grid_height)

adjacent_count+=(grid[target.y][target.x]=='#');

}

if(grid[p.y][p.x]=='L' && adjacent_count==0)

{

next[p.y][p.x] = '#';

changed = true;

}

else if(grid[p.y][p.x]=='#' && adjacent_count>=4)

{

next[p.y][p.x] = 'L';

changed = true;

}

});

grid = next;We create a copy of all cells, and iterate over all coordinates. For each of those we iterate over all valid neighbors and count how many of them are occupied, setting the new value in our copy according to the given rules. We can’t set it in our current grid, as all cells should act as if they updated simultaneously and modifying the grid step by step would change results for later processed cells which contain already updated ones as neighbors.

Part 2

The difficulty was increased ever so slightly by not only considering direct neighbors but everything within ones line of sight. Nothing a simple loop can’t solve, thankfully:

for(auto d: directions)

{

auto target = p+d;

while(target.x>=0 && target.x<grid_width && target.y>=0 && target.y<grid_height)

{

if(grid[target.y][target.x]=='#')

{

++adjacent_count;

break;

}

if(grid[target.y][target.x]=='L')

break;

target+=d;

}

}Day 12

Part 1

Given a number of instructions for moving a ship around on a simple 2 dimensional coordinate system (but with cardinal directions), we had to correctly determine which position we end up in. The minor difficulty arises from some directions not being absolute, but relative to the ships current orientation, which we also have to keep track of. Let’s not get ahead of ourselves and first declare the possible instructions and define how they are parsed:

enum class type

{

turn_left,

turn_right,

move_forward,

move

};

struct instruction

{

type t;

int step;

vec2d direction;

};

std::istream& operator>>(std::istream& in, instruction& ins)

{

char c;

in>>c;

in>>ins.step;

switch(c)

{

case 'N': ins.t=type::move; ins.direction={0,-1}; break;

case 'E': ins.t=type::move; ins.direction={1,0}; break;

case 'W': ins.t=type::move; ins.direction={-1,0}; break;

case 'S': ins.t=type::move; ins.direction={0,1}; break;

case 'F': ins.t=type::move_forward; break;

case 'R': ins.t=type::turn_right; ins.step/=90; break;

case 'L': ins.t=type::turn_left; ins.step/=90; break;

default: break;

}

return in;

}I already did some minimal preprocessing here. For one, I chose to save the directions as 2d vectors for easier addition.

Furthermore, I noticed all turns happened in multiples of 90 degree, so instead of saving the angles, I remembered how many 90 degree turns they correspond to. 90 degree turns are easy:

vec2d turn_left(vec2d old)

{

std::swap(old.x,old.y);

old.y=-old.y;

return old;

}

vec2d turn_right(vec2d old)

{

std::swap(old.x,old.y);

old.x=-old.x;

return old;

}Whilst it could maybe have been solved more elegantly with complex multiplication, this works perfectly fine for now so and I really like the clarity of this code which is readable without much of a mathematical background (although it’s always nice to have that nonetheless).

Given this scaffolding, all that is left is iterating over the instructions and modifying either position or direction accordingly:

std::for_each(std::istream_iterator<instruction>{std::cin},{},[&](auto ins)

{

switch(ins.t)

{

case type::move: pos+=ins.direction*ins.step; break;

case type::turn_left: for(int i=0;i<ins.step;++i) current_direction = turn_left(current_direction); break;

case type::turn_right: for(int i=0;i<ins.step;++i) current_direction = turn_right(current_direction); break;

case type::move_forward: pos+=current_direction*ins.step; break;

}

});Part 2

Part 2 on this day was nearly identical, only the “move”-instructions were changed to not move the ship directly but modify its movement vector instead, which was applied on the “move-forward” command. The turn commands were also changed to turn the movement vector. Again, it could have been simplified with complex numbers, but worked out fine with my trivialized approach, the only change being one case of instruction handling:

switch(ins.t)

{

case type::move: waypoint+=ins.direction*ins.step; break;

case type::turn_left: for(int i=0;i<ins.step;++i) waypoint = turn_left(waypoint); break;

case type::turn_right: for(int i=0;i<ins.step;++i) waypoint = turn_right(waypoint); break;

case type::move_forward: pos+=waypoint*ins.step; break;

}Day 13

Part 1

Given a starting time and a number of bus ids - which also represented their round trip time - we were tasked with finding the first bus to arrive at or after the starting time. For this one, all busses started out at 0, meaning we only had determine the first multiple of each id >= the starting time and choose the minimum of those. To do so, we compute the ceil(i.e. rounded up) result of dividing the starting time by id and subsequently multiply id by that result:

long min_time = std::numeric_limits<long>::max();

long best_id = 0;

for(auto id:ids)

{

auto next_factor = first_timestamp/id + (first_timestamp%id!=0);

auto ts = next_factor * id;

if(ts<min_time)

{

best_id = id;

min_time = ts;

}

}Part 2

It really pays off to know a tiny bit of linear algebra from time to time ;-) Without it, this one would be really, really hard.

Given not only the periods, but a desired offset for each id, we had to determine the first time satisfying for which each bus arrived exactly its offset later. In other words, we had to solve a series of equations of the form

x = -a mod id0

x = -b mod id1

x = -c mod id2...

That is a case for the famous Chinese remainder theorem!

As such, we first need a way to compute modular multiplicative inverses, which can be done with the extended euclidean algorithm:

auto multiplicative_inverse(long long a, long long b)

{

auto old_r = a;

auto r = b;

long long old_s = 1;

long long s = 0;

while(r!=0)

{

auto quotient = old_r/r;

auto new_r = old_r-quotient*r;

auto new_s = old_s-quotient*s;

std::tie(old_r,r) = std::tie(r,new_r);

std::tie(old_s,s) = std::tie(s,new_s);

}

while(old_s<0)

old_s+=b;

return old_s;

}For each id, we compute the modular inverse of it with the product of all others and multiply it with that product and the desired offset. Our result is the sum of doing that for each id, modulo the product of all ids. Unfortunately, the offsets are negative in our case and modulo with negative numbers is - ummm - let’s say special in C++, so I used an expensive loop instead:

long long result = 0;

for(auto id:ids)

{

const auto mod = mod_product/id.mod;

const auto inverse = multiplicative_inverse(mod,id.mod);

auto off = -id.offset;

while(off<0) off+=id.mod;

result+=off*mod*inverse;

result%=mod_product;

}Day 14

Part 1

This time, we were supposed to trace modified writes to a special memory. Whenever a value is written, it is first modified based on a simple bitmap by either setting the bits to 0 or 1 or alternatively, leaving them as they were in case of an X. For example, given the following input:

mask = XXXXXXXXXXXXXXXXXXXXXXXXXXXXX1XXXX0X

mem[8] = 11

memory at address 8 should obtain the value 1011 (i.e. binary 11), but adding a 1 bit at the 7th position and changing the 1 at the second one to a zero: 1001001 (which is 73 in decimal).

My implementation simply constructed two masks, one to &(representing the X’s) and one to |(representing everything else), for those operations:

const auto update_mask = [&](const std::string& new_mask)

{

mask_and = mask_or = 0;

std::size_t shift = 0;

for(auto it = new_mask.rbegin();it!=new_mask.rend();++it, ++shift)

{

mask_and |= (*it=='X'?1ll:0ll)<<shift;

mask_or |= (*it=='1'?1ll:0ll)<<shift;

}

};Which were then applied to modify assignments:

memory[addr] = (value&mask_and) | mask_or;

As the address range was 36 bits and as such incredibly huge, whilst only a small number of cells could actually be used, I chose to represent it as a hashmap:

std::unordered_map<std::uint64_t, std::uint64_t> memory;Part 2

Same input, same mask, but very different interpretation. Now an X does not mean the given value, but any possible one, meaning we will have to iterate over all combinations. Furthermore, 0 means to leave the input untouched(the role previously occupied by X), whilst we modify the address written to, not the value. As a first step, we now create 3 masks(one to set to 1, one to leave untouched and one to try all combinations on):

const auto update_mask = [&](const std::string& new_mask)

{

mask_and = mask_or = mask_float = 0;

std::size_t shift = 0;

for(auto it = new_mask.rbegin();it!=new_mask.rend();++it, ++shift)

{

mask_and |= (*it=='X'?0ull:1ull)<<shift;

mask_or |= (*it=='1'?1ull:0ull)<<shift;

}

mask_float = (~mask_and)&((1ull<<36)-1);

};Listing all options might seem complicated at first, but luckily for us, our languages and computers already have a beautiful system to iterate over all combinations of 0 and 1 for a given number of those strung together: counting! So we do just that and simply shift the relevant bits into their desired places afterwards:

const auto foreach_address = [&](std::uint64_t addr, auto fun)

{

addr = (addr&mask_and) | mask_or;

std::uint64_t set_bits = ptl::popcount(mask_float);

for(std::uint64_t i=0;i<(1ull<<set_bits);++i)

{

auto addr_cpy = addr;

auto mask_cpy = mask_float;

for(std::size_t j=0;j<set_bits;++j)

{

const auto bitpos = ptl::countr_zero(mask_cpy);

mask_cpy&=~(1ull<<bitpos);

addr_cpy|=((i>>j)&1ull)<<bitpos;

}

fun(addr_cpy);

}

};To count bits and determine bit positions, I would have loved to use standard functions, but the bit header is only available from C++20 onwards and I was using C++17 only here. As such, I substituted my own inferior version created for my chess engine, paulchen332.

Day 15

Part 1

Let’s play a number game. We start of by reading a bunch of given numbers and remembering the previous two times they were said. Now, after they were read once, the next number depends on the previous one. If it was spoken for the first time, we speak 0. Otherwise, we speak the difference between the last two times it was spoken. That is all. Our task was to determine the 2020th number spoken. Rather cryptic instructions to read and it took me a few minutes to comprehend what was asked of us, but once that fog had cleared, a solution is rather trivial. First define what we have to remember for each spoken number and a hashmap storing this piece of information:

struct diff

{

std::size_t prev = 0, last = 0;

};

std::unordered_map<int,diff> last_spoken;And then simply simulate the process exactly as given:

int last = 0;

for(std::size_t i=0;i<2020;++i)

{

if(i<numbers.size())

{

last = numbers[i];

last_spoken[numbers[i]] = {i,i};

}

else

{

const auto& data = last_spoken[last];

const auto dist = data.last-data.prev;

last = dist;

if(auto it = last_spoken.find(dist); it==std::end(last_spoken))

{

auto& to_change_data = last_spoken[dist];

to_change_data.prev = i;

to_change_data.last = i;

}

else

{

auto& to_change_data = last_spoken[dist];

to_change_data.prev = to_change_data.last;

to_change_data.last = i;

}

}

}Part 2

Nothing changed, we are just asked for a significantly higher number of turns. Seems difficult at first, but I took the lucky shot and tested if my previous solution worked fast enough and it turns out it completed within 8 seconds with a correct result, jumping in leaderboard position from 1700-ish to 500-ish(far from global again, of course). Nonetheless, I was certain we can do better here.

The first trick I could think of, as it appeared occasionally in problems of this kind, is detecting cycles, so I tried this and it turns out, at least for my numbers and my bound, there was no cycle ;_; So we had to get smarter than that… aaand after far too much time spent on my own attempts I began to google and figured out that:

- Given the right starting conditions it is actually a named sequence with an entry in the OEOIS

- There is a numberphile video about it, which I highly recommend watching. And - of course:

- There is no known way to get at the entries faster than brute force. So anything that is asymptotically better than what I wrote for part 1 would be a minor mathematical breakthrough ;-)

As such, all I could do was optimize what I was already doing. The first and most obvious choice is cutting down on unnecessary allocations, by preallocating enough elements into my hashmap:

last_spoken.reserve(30000000);This cut the runtime down to 5 seconds, which is quite a decent improvement for one line. Switching from a hashmap to a simple vector of 30000000 elements brought another similar improvement, getting the time down to just 2 seconds, switching the type saved from a wasteful std::size_t to int reduced it to 1.5 seconds. In the process, I also made my code look significantly more compact and beautiful than the horrid mess I showed you for part 1:

for(;i<30000000;++i)

{

const auto& data = last_spoken[last];

const auto dist = data.last-data.prev;

last = dist;

auto& to_change_data = last_spoken[dist];

to_change_data.prev = to_change_data.seen?to_change_data.last:i;

to_change_data.last = i;

to_change_data.seen = true;

}Hacking around a tiny bit more to get a more compact memory layout by stealing a bit from “last” instead of having a separate variable for “seen” got the time down to about 1 second, at which point I stopped bothering.

Day 16

Part 1

More data validation, yeahy! *sigh*

We have a bunch of tickets with a number of fields, all identified in a language we do not understand. Luckily for us, we also know which fields have to follow which rules, so we might have a shot at ordering them. But first, let’s make sure our data is valid by identifying fields that cannot be valid for any rule and count them.

Rules are given in the form

class: 1-3 or 5-7

which implies all entries of the “class” type have to be between 1 and 3(inclusive) or 5 and 7. It’s always exactly two non-overlapping ranges and the second always starts after the first. So here is how I represented and parsed the rulesets:

struct inclusive_range

{

int start, end;

};

struct rule

{

std::string name;

inclusive_range lower, higher;

};

std::istream& operator>>(std::istream& in, rule& r)

{

r.name.clear();

std::string part;

while(in>>part && part.back()!=':')

{

r.name+=part+' ';

}

r.name+=part;

r.name.pop_back();

char skip_c;

in>>r.lower.start>>skip_c>>r.lower.end;

in>>part;

in>>r.higher.start>>skip_c>>r.higher.end;

return in;

}With the parsing out of the way, as not exactly infrequently, the real task is just a form of counting:

const auto error_rate = std::accumulate(std::begin(tickets),std::end(tickets),0,[&](const auto total, const auto t)

{

int sum = 0;

for(auto e:t.entries)

{

bool valid = false;

for(const auto& r:rules)

{

if(e>=r.lower.start && e<=r.lower.end)

valid = true;

if(e>=r.higher.start && e<=r.higher.end)

valid = true;

}

if(!valid)

sum+=e;

}

return total + sum;

});Part 2

Alright, now we get to the ever so slightly more interesting part: Identifying which column belongs to which field. The simplest way to do this is checking all options. The somewhat more clever one I could think of is to determine the first rule by finding one that can only be valid for one column. Afterwards, rinse and repeat:

First, we setup variables to keep track of which rules and fields we have already identified:

std::vector<std::optional<std::size_t>> found_target_entry;

found_target_entry.resize(rules.size());

std::vector<std::optional<std::size_t>> found_target_rule;

found_target_rule.resize(rules.size());

std::size_t rules_found_count = 0;Determining if one and only one previously unassigned entry fits a given rule is done via this lovely lambda:

const auto determine_unique_entry_for = [&](int rule_id)

{

std::optional<std::size_t> entry_n;

for(std::size_t j=0;j<tickets[0].entries.size();++j)

{

if(found_target_rule[j])

continue;

const auto valid = std::all_of(std::begin(tickets),std::end(tickets),[&](const auto& t)

{

return is_valid(t.entries[j],rules[rule_id]);

});

if(valid)

{

if(entry_n)

{

entry_n = std::nullopt;

break;

}

else

entry_n = j;

}

}

return entry_n;

};It checks all, skipping over all assigned ones and checks validity. If no option or more than one valid option is found, we return nullopt, otherwise we return the index.

We then repeat it for all rules until everything becomes clear:

while(rules_found_count<rules.size())

{

for(std::size_t i=0;i<rules.size();++i)

{

if(!found_target_entry[i])

{

if(auto entry_n = determine_unique_entry_for(i); entry_n)

{

found_target_entry[i] = *entry_n;

found_target_rule[*entry_n] = i;

++rules_found_count;

break;

}

}

}

}And that’s it for the day. It would - of course - fail spectacularly if more than one valid solution exited ;-)

Whilst I am not generally too big a fan of the validation type puzzles - as they really mostly test if you are capable of correctly reading instructions and data - the second part today was amazing and a lot of fun!

Day 17

Part 1

We already had one Game of Life type puzzle this year, so seeing this one did not excite me too much at first. But in this tragic year it seemed needed and - reiterating the idea of spiraling - this one did take the previous one up to 11. Or rather 3 and eventually 4.

We start of with an infinite 3 dimensional “Conway Cube” with only one slight slice initialized and are supposed to extrapolate its growth.

For this first task, I chose the probably worst possible representation of an expanding, very sparsely packed three dimensional grid possible: a vector of vector of string.

using layer = std::vector<std::string>;

std::vector<layer> grid3d;Doing it like this made the update step incredibly unwieldy and tedious, as I had to take care to add two layers and resize every layer by 2, whilst keeping track of shifted indices and different bounds for the updated copy:

const auto update_grid = [&]()

{

std::vector<layer> ret_val;

for(int z=-1;z<static_cast<int>(grid3d.size()+1);++z)

{

ret_val.push_back({});

ret_val.back().resize(grid_height+2);

for(auto& row: ret_val.back())

row.resize(grid_width+2);

foreach_in_square({-1,-1},{grid_width,grid_height},[&](auto p2)

{

auto p3 = point3d{p2.x,p2.y,z};

std::size_t count = 0;

for(auto v: directions)

{

if(auto target = p3+v; valid_in_original(target))

count += grid3d[target.z][target.y][target.x]=='#';

}

if(valid_in_original(p3) && grid3d[p3.z][p3.y][p3.x]=='#')

ret_val.back()[p3.y+1][p3.x+1] = (count==2 || count==3)?'#':'.';

else

ret_val.back()[p3.y+1][p3.x+1] = (count==3)?'#':'.';

});

}

grid_width+=2;

grid_height+=2;

return ret_val;

};Certainly not the best code I have ever written, if you care for your eyes you should avert them.

Part 2

You thought 3 dimensions was fun? Let’s add another one and consider 4D hypercubes. My first surprisingly working solution simply extended the approach above logically. Instead of a vector of vector of string, I kept a vector of vector of vector of string and proceeded similarly to above, just one more nesting deeper. Quite the abomination, too horrendous to ever share with any living thing. I did, however, eventually see the error of my ways and recognized the problem description clearly screams for generalization of n dimensions, so generalize is what I did.

First of all, I needed arbitrarily dimensional vector and point structs

template <std::size_t dim>

struct vec

{

std::array<int,dim> coordinate;

};

template <std::size_t dim>

struct point

{

std::array<int,dim> coordinate;

};with appropriate operator overloads like this:

template <std::size_t dim>

constexpr point<dim>& operator+=(point<dim>& lhs, const vec<dim>& rhs) noexcept

{

for(std::size_t i=0;i<dim;++i)

lhs.coordinate[i]+=rhs.coordinate[i];

return lhs;

}Computing vectors to all neighbors can be done with some simple recursion:

template <std::size_t dim>

constexpr auto neighbor_vectors() noexcept

{

std::array<vec<dim>,pow(3,dim)-1> ret_val;

std::size_t count = 0;

const auto recursive_fill = [&](vec<dim> current, std::size_t coord, auto recurse)

{

if(coord==dim)

{

if(!std::all_of(std::begin(current.coordinate),std::end(current.coordinate),[](auto v){ return v==0; }))

ret_val[count++]=current;

return;

}

for(int v=-1;v<2;++v)

{

current.coordinate[coord]=v;

recurse(current, coord+1, recurse);

}

};

recursive_fill({},0,recursive_fill);

return ret_val;

}Given all that, I needed a better, less wasteful representation of the grid/cube/hypercube/whatever, i.e. of infinite space in arbitrary dimensions. The one I chose was a simple set of active/occupied cells:

constexpr auto dim = 4;

std::set<point<dim>> cells;An unordered_set, i.e. a hashset, might have been more efficient than this tree based one, but I really did not know how to define a good hash function for an arbitrarily large collection of integers, whilst a operator< is very natural.

The update function becomes much better than in part 1, even bearable to look at:

const auto update_cells = [&]()

{